Speech to Text: Difference between revisions

No edit summary |

|||

| Line 59: | Line 59: | ||

==Progress so far== |

==Progress so far== |

||

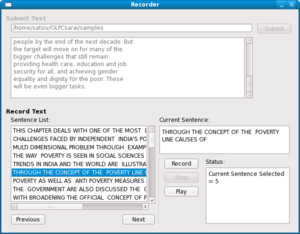

[[Image:RecorderApplication.png|thumb|right|Screenshot of the Recorder Application]]

Latest revision as of 08:46, 9 June 2009

Synopsis

The project involves bringing Speech to Text support to OLPC while keeping in mind the specific needs of children.

Because of space and power constraints we do not, as of now, have this useful tool. However, the discussions on the devel list[1] suggested that, because our intended end users are children, we can afford to compromise slightly on the quality of the engine. Some arguments in support of this view are:

- Even a sub-optimal implementation (in terms of accuracy) will suffice for a start. Children learn to talk in whatever way gets the job done, so they adapt very well.

- Full Speech to Text may be overkill, since children will hardly need the advanced features. A simple 'dictation'-based implementation will suffice.

To showcase the potential uses of the engine:

- A dictation activity will be built.

- A 'command and control' tool will also be built to let the child use the XO by speaking commands such as 'open <activity name>'.
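
As a rough illustration of the 'command and control' idea, the sketch below maps a recognized utterance of the form 'open <activity name>' to an action. The function name `parse_command`, the tuple it returns, and the list of activity names are assumptions made for this example. They are not part of Julius or Sugar, and actually launching the activity is left out.

```python
def parse_command(utterance, known_activities):
    """Map a recognized utterance to an ("open", activity) action.

    Hypothetical helper: returns None when the utterance is not an
    'open <activity name>' command or the activity is unknown.
    """
    words = utterance.lower().split()
    if len(words) < 2 or words[0] != "open":
        return None
    spoken_name = " ".join(words[1:])
    for activity in known_activities:
        # Compare case-insensitively, since a recognizer may emit
        # upper-case or lower-case words.
        if activity.lower() == spoken_name:
            return ("open", activity)
    return None


if __name__ == "__main__":
    activities = ["Write", "Listen-And-Spell"]  # example names only
    print(parse_command("OPEN WRITE", activities))
    print(parse_command("close write", activities))
```

Restricting the tool to a small, fixed set of commands keeps the grammar tiny, which fits the argument above that a simple implementation is enough to start with.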

Some other uses that were discussed on the list and off-list are:

- A speaking activity: children see the letters appear as they speak and come to recognize the shape and form of basic words.

- It can be used as an aid in the 'translate' activity, where children translate other activities and share them with other children.

The actual potential of this engine can only be realized when a substantial step is taken to provide a real-world, user-friendly implementation.

Plans for Implementation

The idea is to port an existing open-source speech to text engine to OLPC as a starting point. Technically, Julius and Sphinx seem to be the best choices: VoxForge supports both of them, and both are widely used. Sphinx comes in several flavors, and the version numbers can be confusing. The most notable are Sphinx 3 and Sphinx 4. Sphinx 3 was written in C, and Sphinx 4 was later released as a complete rewrite in Java. I believe Sphinx 4 is not a viable option because of the lack of proper Java support and the relative heaviness of Java applications.

Julius is also written in C and is the main contender against Sphinx 3. Some points in favor of Julius are:

- Julius is better suited to dictation, which is what we are looking for here.

- The Simon project has compared Speech to Text engines in practice, and Julius appears to have scored well in that comparison.

- Testing of Julius on various machines (and different OSes) showed that Julius needs no additional configuration for installation.

The main tasks that will be involved are:

- Port Julius to XO: This will involve building Julius on the XO and resolving all missing dependencies. As Julius works in "almost real time" on "modern PCs", the code will need some optimization to run well on the XO. This can be done either through algorithmic optimizations or by using existing signal processing libraries that use fast SIMD instructions (MMX, 3DNow!, etc.) to ease the signal processing load. A discussion with Mr. John Gilmore on this point was very helpful: he suggested using the GNU Radio software, which contains a good library that can be used, or improved, for this purpose.

- Build the dictation activity that can serve as a proof of concept.

- Build the 'command and control' tool that enables the child to operate the laptop with speech commands.

Plans for Localization

- The first step would be to get it working for Japanese (the only language that Julius currently supports) or English (using VoxForge).

- The next step that is of particular interest from the Indian perspective is support for Hindi. Currently, there are two ways of doing so:

  - HindiASR integration: HindiASR uses a Hindi model that is currently compatible with Sphinx 4. This needs to be ported to Julius.

  - Collecting Hindi voice samples: To get Julius to understand a different language, we need a collection of recorded speech with matching transcripts. The samples should preferably come from children, to best suit our purpose.

References

- Slashdot article, http://linux.slashdot.org/article.pl?sid=06/10/10/1953216&from=rss

- Julius, http://sourceforge.jp/projects/julius

- CMU Sphinx, http://cmusphinx.sourceforge.net

- Simon, http://sourceforge.net/projects/speech2text/

- VoxForge, http://www.voxforge.org/

- HindiASR, http://sourceforge.net/projects/hindiasr

- Discussions on the devel mailing list of OLPC, http://lists.laptop.org/pipermail/devel/2008-September/019136.html

- Sphinx Flavors, http://en.wikipedia.org/wiki/CMU_Sphinx

- In favour of Julius, http://www.voxforge.org/home/about

- STT Comparisons, http://simon-listens.org/index.php?id=124&L=1

Progress so far

- Successfully compiled Julius on XO. This required gcc, perl, and flex.

- After further discussions with OLPC, it was decided not to build a single activity capable of handling speech input. Instead, system-wide speech input was preferred. For example, the Listen-And-Spell activity can use the voice model for voice typing (building an acoustic model for this purpose is a comparatively easy task, as the alphabet of any language is limited), and the Write activity can be used for dictation.

- Recorded 20 hours of speech (Indian English and Hindi).

- Using the CBSE books for the corpus.

- Built an acoustic model for the English alphabet and tested it on Sugar.

- Recorded the Hindi alphabet.

- Built an application using Qt to speed up the recording process. It takes paragraphs as input and breaks them into chunks of 10 words. In the background, the text is stored as numbered samples, as required to create the HTK model. The front end offers the choice of saving the recording, going back to the previous sentence, or going to the next sentence (shown in the window along with the progress), in addition to the Audacity buttons for recording, playing, etc.

- Finding Hindi Lexicon and Grammar files for converting my recorded samples to an Acoustic Model.

- Porting the HindiASR model to Julius.

- Got an X11 development environment running on Sugar.

- Writing a few scripts so that the microphone and Julius together emulate keyboard input to X (OLPC preferred this over the speech library + DBUS combination).
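
The chunking step of the recording application described in the list above could look roughly like the following Python sketch. The actual tool was built with Qt, and this is not its code. The helper names, the `sample` prefix, and the `*/sampleN TEXT` line layout are assumptions; the layout is modeled on the prompts files used in HTK-based VoxForge tutorials and may differ from what the tool really writes.

```python
def chunk_words(paragraph, size=10):
    """Split a paragraph into chunks of at most `size` words."""
    words = paragraph.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def numbered_prompts(paragraphs, size=10, prefix="sample"):
    """Return (sample_name, text) pairs numbered across all paragraphs."""
    prompts = []
    for paragraph in paragraphs:
        for chunk in chunk_words(paragraph, size):
            # Names run sample1, sample2, ... in recording order.
            prompts.append((f"{prefix}{len(prompts) + 1}", chunk))
    return prompts


def format_prompts(prompts):
    """Render prompts one per line; the '*/name TEXT' layout is assumed."""
    return "\n".join(f"*/{name} {text.upper()}" for name, text in prompts)


if __name__ == "__main__":
    text = ["one two three four five six seven eight nine ten eleven twelve"]
    print(format_prompts(numbered_prompts(text)))
```

Keeping the sample numbering in one place means each recording can be saved under the same name as its transcript line, which is what pairs the audio with the text when the model is trained.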

Developers

- Satya Komaragiri (satya.komaragiri@gmail.com, IRC nick mavu)