ColingoXO


Revision as of 20:35, 4 December 2007


Colingo is developing an activity for Sugar called ColingoXO. ColingoXO creates a platform for constructivist language learning by letting children splice video clips together to create and share video narratives. Children will be able to use any clip from the Colingo video library or record new clips with the XO's camera and microphone.

Beyond its role as a simple video editor, ColingoXO will focus on taking advantage of the XO's mesh networking capabilities. Videos will not be stored on individual XOs; instead, they will be housed on and streamed from the school server. Video narratives, which are essentially XSPF playlists, will be kept locally in the Journal and, because they are lightweight and text-based, can easily be passed through the mesh.
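Because a narrative is just an XSPF playlist, an XO only has to pass a small XML file through the mesh, never the video itself. The sketch below shows what such a file could look like. It assumes clips are addressed by URLs on the school server; the function name `make_xspf`, the server name, and the paths are hypothetical.

```bash
# Hypothetical helper: print an XSPF playlist (a "video narrative") whose
# tracks point at clips streamed from the school server.
# Usage: make_xspf TITLE URL [URL ...]
# Note: XML special characters in TITLE or the URLs are not escaped here.
make_xspf() {
    local title="$1"
    shift
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<playlist version="1" xmlns="http://xspf.org/ns/0/">'
    echo "  <title>$title</title>"
    echo '  <trackList>'
    local url
    for url in "$@"; do
        echo "    <track><location>$url</location></track>"
    done
    echo '  </trackList>'
    echo '</playlist>'
}

# Example: a two-clip narrative (server name and paths are made up).
make_xspf "Greetings" \
    "http://schoolserver/colingo/en/hello.ogg" \
    "http://schoolserver/colingo/es/hola.ogg"
```

The whole narrative is a few hundred bytes of text, which is what makes it cheap to share between XOs.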

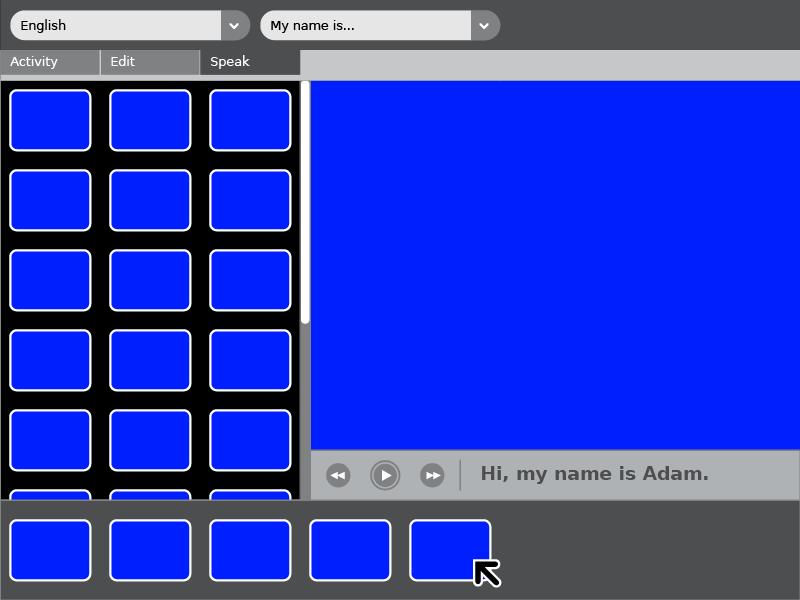

Interface

This interface design is by Eben Eliason, a lead UI designer for the OLPC project and the primary author of the OLPC Human Interface Guidelines. Please post comments on the discussion page, or ping lionstone in #olpc-content.

Interface description

The comboboxes at the top let the user switch the language or select a phrase. Typing words directly into the phrase box narrows the results to the matching phrases. The video bank on the left contains all of the videos that match the language and phrase filters in the toolbar. It shows up to 15 clips before it needs to scroll, and scrolls for easy navigation when more clips match.
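The language and phrase filtering can be sketched over a simple metadata listing. This assumes a hypothetical tab-separated file with one clip per line (language, phrase, file name); ColingoXO's actual data model is not specified here, and `filter_clips` is an illustrative name.

```bash
# Hypothetical sketch of the video bank filter.
# Assumed metadata format (not ColingoXO's actual one), one clip per line:
#   language<TAB>phrase<TAB>filename
# Prints the filenames of clips in language LANG whose phrase contains the
# typed text, ignoring case (at least for ASCII letters); an empty TYPED
# string matches every phrase in that language.
filter_clips() {
    local lang="$1" typed="$2" metadata="$3"
    awk -F'\t' -v lang="$lang" -v typed="$typed" '
        $1 == lang && (typed == "" || index(tolower($2), tolower(typed)) > 0) {
            print $3
        }
    ' "$metadata"
}

# Example with a throwaway metadata file.
metadata=$(mktemp)
printf 'en\tHello, how are you?\thello.ogg\nen\tGood night\tnight.ogg\nes\tHola, ¿cómo estás?\thola.ogg\n' > "$metadata"
filter_clips en hello "$metadata"   # prints hello.ogg
rm -f "$metadata"
```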

The timeline is the main interactive component, since it defines the dialogue for the session. The mockup shows it as a standard (not yet finished) Tray control, which will automatically provide drag-and-drop support, reordering, and paging, and will help support networked collaboration on the timeline. This will make it easy for kids to create a back-and-forth dialogue with each other by taking turns selecting video clips, ending up with a record of that conversation that they can play back in any language.
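Reordering a clip on the timeline amounts to removing it from one position in an ordered list and inserting it at another. The Tray control is expected to do this itself; the sketch below only illustrates the operation on a bash array, and `move_clip` and the clip names are hypothetical.

```bash
# Hypothetical sketch: move the clip at index FROM to index TO (0-based)
# in the global array `timeline`, shifting the clips in between.
# No bounds checking is done.
move_clip() {
    local from="$1" to="$2"
    local clip="${timeline[$from]}"
    # Remove the clip, keeping the remaining clips in order.
    local rest=("${timeline[@]:0:from}" "${timeline[@]:from+1}")
    # Insert it at the target position.
    timeline=("${rest[@]:0:to}" "$clip" "${rest[@]:to}")
}

timeline=(hello.ogg hola.ogg how_are_you.ogg bien.ogg)
move_clip 3 1
echo "${timeline[@]}"   # hello.ogg bien.ogg hola.ogg how_are_you.ogg
```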

The video occupies the rest of the screen, so it can be displayed fairly large.

Beneath the video there are some basic controls. The play/stop button is self-explanatory. The next and previous buttons skip between the video clips in the sequence, like tracks on a CD. Finally, the text beneath the video updates in real time to show the written form of the phrase being spoken during playback.
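The next and previous buttons only need the index of the current clip and the number of clips in the sequence. The design does not say whether they stop at the ends or wrap around; this sketch assumes they stop at the first and last clip, and the function names are hypothetical.

```bash
# Hypothetical sketch of the next/previous controls.
# Indices are 0-based; COUNT is the number of clips on the timeline.
# Assumption: the buttons stop at the ends instead of wrapping around.
next_clip() {
    local current="$1" count="$2"
    if (( current + 1 < count )); then
        echo $(( current + 1 ))
    else
        echo "$current"
    fi
}

previous_clip() {
    local current="$1"
    if (( current > 0 )); then
        echo $(( current - 1 ))
    else
        echo "$current"
    fi
}

next_clip 2 4       # 3
next_clip 3 4       # 3 (already on the last clip)
previous_clip 0     # 0 (already on the first clip)
```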

Specifications

There are four programmatic arenas we must focus on in developing ColingoXO: how the user interacts with the XO, how one XO interacts with other XOs, how an XO interacts with the XS, and how the XS interacts with the rest of the world. Our primary focus right now is on the user interface on the XO and how the XO interacts with the server. Our secondary focus is on how the XOs interact with one another, and our tertiary focus is on how the XS interacts with the rest of the world.

User -> XO

From a programmatic perspective, the interface for the user must:

- read/display available video files

- search the video library by the user's chosen language and return the matching videos in that language

- create/save video clips

- create/save playlists built by dragging/dropping videos onto a "timeline"

- specify videos/playlists as shareable or private

- play back individual videos and playlists

- allow control via keyboard, mouse and integrated gamepad

XO -> XS

Like the XO, the XS is still under heavy development, and not all of the software that will run on it has been settled yet. This makes our task a little more difficult, but it should push us to build our software with greater flexibility in mind. We are still trying to determine which SQL database will run on the XS (we have been told that it will probably be MySQL), and it has not yet been decided how or where videos will be stored and streamed on the XS. Regardless, our specs are as follows:

- XO must be able to write and read video files/playlists to/from the XS

- XS must be able to stream the same video(s) simultaneously to multiple XOs

- XS will host a database containing metadata about each video clip, including links that bridge videos with the same content across all languages

- XS and its database must have support for any character set (from Arabic to Zapotec!)
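One way to picture the "bridging" metadata is to give every clip a shared concept identifier, so that clips saying the same thing in different languages can be found from one another. The table layout, identifiers, and function name below are hypothetical, since the XS database has not been chosen; storing the text as UTF-8 is one way to meet the any-character-set requirement.

```bash
# Hypothetical bridging table, tab-separated and UTF-8 encoded:
#   concept_id<TAB>language<TAB>filename
# Clips that share a concept_id say the same thing in different languages.

# Print the clip(s) in language LANG that share a concept with clip FILE.
translate_clip() {
    local file="$1" lang="$2" table="$3"
    awk -F'\t' -v file="$file" -v lang="$lang" '
        # First pass: remember the concept(s) the source clip belongs to.
        NR == FNR { if ($3 == file) concept[$1] = 1; next }
        # Second pass: print clips in the target language with that concept.
        ($1 in concept) && $2 == lang { print $3 }
    ' "$table" "$table"
}

table=$(mktemp)
printf '%s\t%s\t%s\n' \
    greeting en hello.ogg \
    greeting es hola.ogg \
    greeting ar مرحبا.ogg \
    thanks en thank_you.ogg > "$table"
translate_clip hello.ogg es "$table"   # hola.ogg
rm -f "$table"
```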

XO -> XO

The collaborative aspect of the XOs is where these machines really shine. Our activity should embody an ethos of collaboration and take full advantage of the mesh networking capabilities of the XO.

- Read/write shared files

- Collaborate in realtime on shared playlists

- Support groups of XOs collaborating on one project

- Provide visual signatures on clips/playlists (perhaps a watermark of the student's colored XO character)

- Some form of communication beyond just manipulating files (e.g. a bridge to the Chat activity)

XS -> World

Each XS will host its own library of videos developed by the students, as well as initial seeded content provided by Colingo. On the OLPC wiki, there has been discussion of regional XSs communicating with one another, and of Internet-connected XSs sharing content with the world.

- XSs should be able to share video content and playlists with the rest of the world.

- Video content from the XSs should be available to colingo.org, which will be the portal through which these videos are shared.

- XSs should be able to pull content (videos) from colingo.org to share with the students.

- XSs should be able to share their metadata (e.g. translations and associations of clips with languages) with one another and with colingo.org to build a global, decentralized database.
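At its simplest, sharing metadata between servers means merging several servers' tables into one without duplicating rows. This sketch assumes each XS can export its metadata as a tab-separated file with one fact per line (a hypothetical format), and it ignores conflict resolution, which a real decentralized database would need.

```bash
# Hypothetical sketch: merge metadata exported by several school servers
# into one deduplicated listing. Each input file holds one row per line.
merge_metadata() {
    # LC_ALL=C makes sort compare raw bytes, so only byte-identical UTF-8
    # rows from different servers are collapsed into one.
    LC_ALL=C sort -u "$@"
}

a=$(mktemp); b=$(mktemp)
printf 'greeting\ten\thello.ogg\ngreeting\tes\thola.ogg\n' > "$a"
printf 'greeting\tes\thola.ogg\nthanks\ten\tthank_you.ogg\n' > "$b"
merge_metadata "$a" "$b"   # three rows; the shared "hola.ogg" row appears once
rm -f "$a" "$b"
```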

Activity vs Content Bundle

There are two ways to develop for OLPC:

- Creating an activity

  - ColingoXO is our activity. Activities are robust Python programs that can, for example, program the camera or create databases with SQLite.

- Creating content bundles

  - A content bundle is a curated collection of content viewable from the Library. Content bundles are limited in functionality and intended primarily to house content.

Our content bundles

On November 2, 2007, Colingo released four content bundles:

- All our movies

- English-only

- Spanish-only

- Portuguese-only

We will be releasing more content bundles shortly:

- Lessons 2-10 of basic English

- Lessons 2-10 of basic Spanish

- Lessons 2-10 of basic Chinese

Please help test our content bundles, and see the known issues with them. You can also watch an online demo if your web browser can play Ogg Theora video.

Get involved!

We need your help to create a revolutionary educational tool completely in line with the XO's constructivist educational philosophy. If you are committed to the ideals of Free Software and are interested in helping to develop a platform for global language exchange, please say hello on IRC, check out our dev environment, and learn how to become a Colingo developer.

Code

Checking out code from Colingo

Note (8/23/07): We are currently hosting ColingoXO code on the Colingo development server. Please see the instructions about how to check out code from the ColingoXO repository.

Our sugarized SVG activity icon

Integrates cleanly into the activity bar and the Home view activity ring.


<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<!-- Created with Inkscape (http://www.inkscape.org/) -->

<!-- by Ben Lowenstein 10-08-07 -->

<!DOCTYPE svg PUBLIC "-//W3C//DTD SVG 1.1//EN" "http://www.w3.org/Graphics/SVG/1.1/DTD/svg11.dtd" [

<!ENTITY fill_color "#FFFFFF">

<!ENTITY stroke_color "#000000">

]>

<svg

xmlns:svg="http://www.w3.org/2000/svg"

xmlns="http://www.w3.org/2000/svg"

version="1.0"

width="55"

height="55"

viewBox="0 0 55 55"

id="svg2160"

xml:space="preserve"><defs

id="defs2183">

</defs><line

style="fill:none;stroke:&stroke_color;;stroke-width:3.38274455;stroke-linecap:round;stroke-linejoin:round;display:inline"

id="line2174"

y2="34.398335"

y1="41.699673"

x2="28.363632"

x1="32.03511"

display="inline" /><path

d="M 80.538794,14.634424 A 3.7837837,5.7432432 0 0 1 82.360937,3.919944"

transform="matrix(4.5103261,1.9208816,0,1.503442,-344.36635,-144.93321)"

style="fill:none;fill-opacity:1;stroke:&stroke_color;;stroke-width:1.99899995;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1"

id="path4147" /><path

d="M 80.538794,14.634424 A 3.7837837,5.7432432 0 0 1 82.360937,3.919944"

transform="matrix(-4.5103261,-1.9208816,0,-1.503442,401.38327,199.09105)"

style="fill:none;fill-opacity:1;stroke:&stroke_color;;stroke-width:1.99899995;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1"

id="path5118" /><line

display="inline"

x1="35.277481"

x2="28.57617"

y1="30.112953"

y2="34.113159"

id="line5120"

style="fill:none;stroke:&stroke_color;;stroke-width:3.38274455;stroke-linecap:round;stroke-linejoin:round;display:inline" /><line

display="inline"

x1="25.064001"

x2="28.735477"

y1="12.559251"

y2="19.86059"

id="line5122"

style="fill:none;stroke:&stroke_color;;stroke-width:3.38274455;stroke-linecap:round;stroke-linejoin:round;display:inline" /><line

style="fill:none;stroke:&stroke_color;;stroke-width:3.38274455;stroke-linecap:round;stroke-linejoin:round;display:inline"

id="line5124"

y2="20.145765"

y1="24.145969"

x2="28.522943"

x1="21.82163"

display="inline" /></svg>
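Sugar colors activity icons by substituting the user's XO colors for the `fill_color` and `stroke_color` entities declared at the top of the file. To preview the icon in a particular color pair outside Sugar, the entity declarations can be rewritten with `sed`; the function name and colors below are only examples.

```bash
# Rewrite the fill_color and stroke_color entity declarations of a
# Sugar-style SVG icon. Usage: recolor_icon FILL STROKE < in.svg > out.svg
# FILL and STROKE go into the sed replacement unescaped, so they must not
# contain '/', '&' or '\'; plain #RRGGBB values are fine.
recolor_icon() {
    local fill="$1" stroke="$2"
    sed -e 's/<!ENTITY fill_color "[^"]*">/<!ENTITY fill_color "'"$fill"'">/' \
        -e 's/<!ENTITY stroke_color "[^"]*">/<!ENTITY stroke_color "'"$stroke"'">/'
}

# Example on just the two entity lines from the icon above.
printf '<!ENTITY fill_color "#FFFFFF">\n<!ENTITY stroke_color "#000000">\n' |
    recolor_icon '#00A0FF' '#008009'
```

In this particular icon every shape uses `fill:none`, so only the stroke color has a visible effect.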

A script for automagic DV capture

This should help OLPC video content creators running Linux (only tested on Debian Etch) who have:

- dvgrab

- ffmpeg2theora

- ffmpeg

installed. The shell script will:

- Connect to an attached firewire camera

- Capture all footage on the tape and split it into clips by timestamp

- Save every clip as Ogg (optimized for XO playback), DV, and FLV

#!/bin/bash
## Script to take a raw DV file and split it by timestamp
## Robin Walsh 10/2007

echo "Please input the series title. The resulting files will be placed"
echo "in a subdirectory by this name."
read -e seriestitle

echo "Now please give me a filename prefix for the DV files I'm about to split up."
read -e prefix

## First, let's create the directory structure.
mkdir -p "$seriestitle/dv" "$seriestitle/flv" "$seriestitle/ogg"

## Next, let's grab the DV from the camera and split it by timestamp into AVI files.
dvgrab --autosplit --timestamp --format dv2 "$seriestitle/dv/$prefix-"

echo "I can wait here while you check for needed video adjustments."
echo "Just hit return when you're ready to convert the grabbed DV into ogg and flv."
read -e waiting

## Convert the captured AVI files into .flv (quoted globs cope with spaces in names).
for f in "$seriestitle"/dv/*.avi
do
    filenameflv=$(basename "$f" .avi)
    ffmpeg -i "$f" -s 320x240 -ar 44100 -r 12 "$seriestitle/flv/$filenameflv.flv"
done

## Convert the captured AVI files into .ogg for XO playback.
for i in "$seriestitle"/dv/*.avi
do
    filenameogg=$(basename "$i" .avi)
    ffmpeg2theora -x 240 -y 160 -v 5 -a -1 -o "$seriestitle/ogg/$filenameogg.ogg" "$i"
done