Ejabberd resource tests/try 3

Try 3: 3000ish clients; past the 500 connection barrier

Eventually I thought to increase the number of open files that ejabberd can use, which allowed it to maintain more than 500 connections. This can be done in a couple of ways: most properly by adding these lines to /etc/security/limits.conf:

    ejabberd soft nofile 65535
    ejabberd hard nofile 65535

or by putting this line in /etc/init.d/ejabberd:

    start() {
    +   ulimit -n 65535
        echo -n $"Starting ejabberd: "

which shouldn't require a new login to take effect.
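To confirm that the new limit actually reached the running server, one option is to read the limits the kernel reports for the process. The sketch below assumes a Linux system with `/proc` mounted; the function names are my own, and the process ID has to be looked up separately (for example with `pgrep`).

```python
def parse_open_files_limit(limits_text):
    """Return (soft, hard) for 'Max open files' from /proc/<pid>/limits text.

    A value of 'unlimited' is returned as None. Returns None if the
    row is not present at all.
    """
    def to_int(value):
        return None if value == "unlimited" else int(value)

    for line in limits_text.splitlines():
        if line.startswith("Max open files"):
            # Row layout: Max open files <soft> <hard> files
            fields = line.split()
            return to_int(fields[3]), to_int(fields[4])
    return None


def read_open_files_limit(pid):
    """Read the open-files limit of a running process (Linux only)."""
    with open("/proc/%s/limits" % pid) as handle:
        return parse_open_files_limit(handle.read())
```

Calling `read_open_files_limit(pid)` with the ejabberd process ID should return `(65535, 65535)` if either of the changes above took effect.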

I started with about 2900 clients, but hyperactivity increased this as it created new clients. It got to around 3300. The number of inactive clients seems to have relatively little effect on ejabberd, so I'm ignoring this.

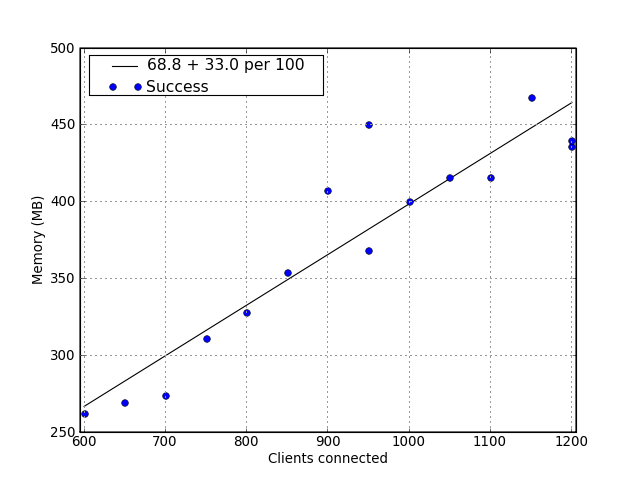

    # Starting with 2926 registered users
    # open files set by ulimit to 65535
    #
    # Clients  secs  mem  load avg  client OK  server OK
       600      15   262    0.75      True       True
       650      15   269    0.84      True       True
       700      15   274    1.12      True       True
       750      15   311    1.32      True       True
       800      15   328    1.66      True       True
       850      15   354    1.80      True       True
       900      15   407    1.83      True       True
       950      15   368    1.78      True       True
       950      15   450    1.78      True       True
      1000      15   400    1.80      True       True
      1050      15   416    2.06      True       True
      1100      15   416    2.01      True       True
      1150      15   468    2.04      True       True
      1200      15   436    2.07      True       True
    # drop all but 200 clients, wait 5 minutes.
    #
       200      15   371    0.63      True       True
      1200      15   440    1.89      True       True
    # memory use was really jumpy. These numbers are approximately what
    # the system converged on over time.
    # (e.g. 1000 clients peaked over 500M, dropped to 390ish)
    #
    # After 800, clients sometimes dropped off.
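As a rough cross-check of the trend in these numbers, here is a minimal least-squares sketch over the ramp-up rows (600 to 1200 clients, before the drop to 200). It assumes the mem column is in MB, and the helper name `fit_line` is my own. The result need not match the fit quoted in the text exactly, since that may have been made over a different selection of points.

```python
# (clients, memory in MB) from the ramp-up part of the table above.
RAMP = [
    (600, 262), (650, 269), (700, 274), (750, 311), (800, 328),
    (850, 354), (900, 407), (950, 368), (950, 450), (1000, 400),
    (1050, 416), (1100, 416), (1150, 468), (1200, 436),
]


def fit_line(points):
    """Ordinary least-squares fit; returns (intercept, slope)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


intercept, slope = fit_line(RAMP)
print("base %.0f MB, %.1f MB per 100 clients" % (intercept, slope * 100))
```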

1200 is about the limit that my test setup can get to (i.e. 4 * 250 + 4 * 50). ejabberd ran quite happily at that point, though the load averages suggest it would not have liked much more.

ejabberd peaked at 667.6MB, and went over 500MB several times. These fleeting binges tended to follow the connection of new clients. As hyperactivity connects with unnatural speed, it would be unfair to judge ejabberd on those numbers, but it does seem that ejabberd could do with 50% headroom over its long-term average.

This data set gives roughly 69MB plus 33MB per 100 connected clients, or about 1070MB for 3000 clients. Adding 50% headroom to that suggests that 1.6GB would accommodate surges. A faster processor is almost certainly necessary.
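The sizing arithmetic can be written out as a short sketch. The constant and function names are my own; the coefficients are the rounded ones from the text, which is presumably why the projection for 3000 clients comes out at 1059MB rather than the roughly 1070MB quoted. With 50% headroom it lands just under 1.6GB either way.

```python
BASE_MB = 69.0               # fitted memory use with no clients connected
MB_PER_CLIENT = 33.0 / 100   # 33MB per 100 connected clients
HEADROOM = 1.5               # 50% over the long-term average, for surges


def projected_memory_mb(clients):
    """Long-term average memory use predicted by the linear fit."""
    return BASE_MB + MB_PER_CLIENT * clients


def provisioned_memory_mb(clients):
    """Projected memory plus headroom for connection surges."""
    return projected_memory_mb(clients) * HEADROOM


print("%.0f MB average, %.0f MB with headroom"
      % (projected_memory_mb(3000), provisioned_memory_mb(3000)))
```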

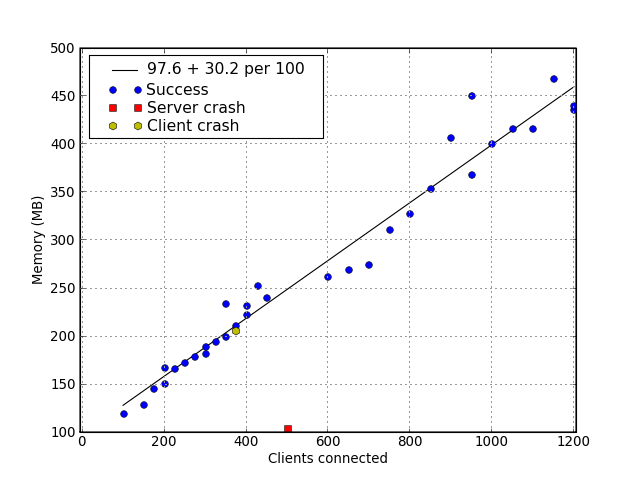

Here is a graph combining the last 2:

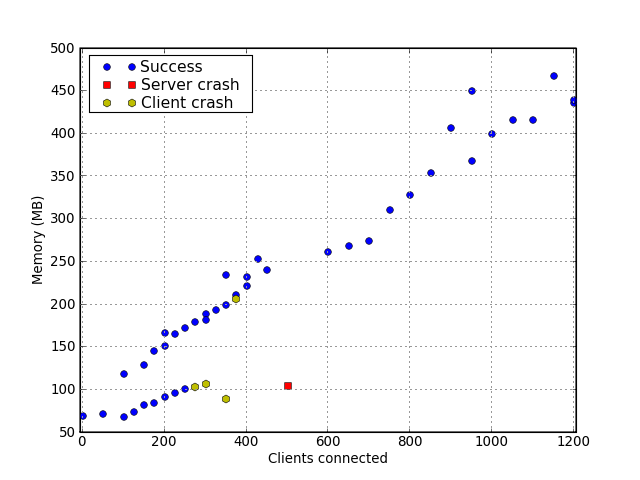

and here is one with them all, without the line (remember the lower set had a quite different number of accounts):

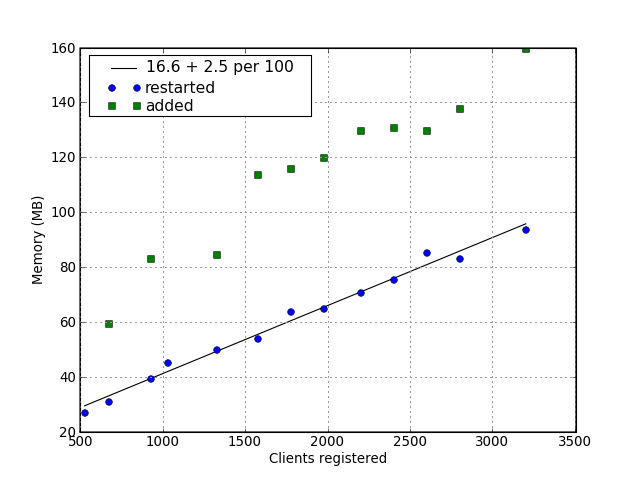

Memory use of inactive users

    # Adding users, restarting to find the base load of registered inactive users.
    #
    # users  after add  after restart
       523       -          27.2
       670     59.5         31.1
       924     83.4         39.4
      1024                  45.4
      1324     84.7         50.0
      1574    113.9         54.1
      1774    116.0         64.1
      1974    120.1         65.2
      2200    130           71.0
      2400    131           75.7
      2600    130           85.3
      2800    138           83.2
      3200    160           93.7

This suggests the memory cost of registered inactive users is also linear, and relatively low at 25MB per thousand.
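The per-thousand figure can be checked against the endpoints of the "after restart" column. This is only a two-point estimate, assuming the values are in MB; the names below are my own.

```python
# (registered users, memory in MB after restart): first and last rows.
FIRST = (523, 27.2)
LAST = (3200, 93.7)


def mb_per_thousand(first, last):
    """Memory growth per 1000 registered users between two measurements."""
    users_added = last[0] - first[0]
    mb_added = last[1] - first[1]
    return mb_added / users_added * 1000


cost = mb_per_thousand(FIRST, LAST)
print("%.1f MB per thousand inactive users" % cost)
```

This comes out at just under 25MB per thousand, in line with the figure above.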