Japanese

Current status of Japanese localization on XO

At this point, fairly basic Japanese input and output can be accomplished by adding Japanese TrueType fonts and Input Methods. The setup is still crude and requires some basic Sugar localization knowledge, so it's not for the faint-hearted. For more information, please see Japanese setup on XO or the current events page for local Japanese volunteers.

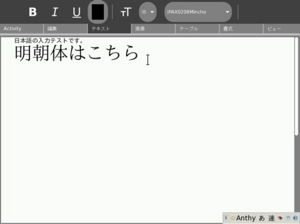

Meanwhile, here's a screenshot of qemu taken by one of the Pootle volunteers.

Some basics on Japanese language

Written Japanese uses four writing systems. The oldest, called Kanji, consists of Chinese Characters imported from China, starting around the 5th century. Quite a few Kanji and new words with Kanji were invented over time in Japan and exported back to China.

Kanji are also called CJK characters, since different subsets in different encodings have been used in Chinese, Japanese, and Korean. Unicode (equivalent to ISO/IEC 10646-1), the Chinese GB18030 standard, and some other character codes try in various ways to include enough characters for all CJK languages.

The second writing system, called "Hiragana", was originally created from simplified handwritten Kanji. While each Kanji character is close to a "root word" in the sense used for Indo-European languages, Hiragana are used as phonetic characters.

The third is "Katakana", another set of phonetic characters. In standard writing, Katakana are used to write foreign phrases, and often also used to give emphasis, in the manner of italics in English.

Hiragana and Katakana are called "Kana" generically.
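For software that works with Unicode text, the parallel between the two Kana sets is easy to see: the Hiragana and Katakana blocks are laid out in the same order, 0x60 code points apart. The following is a minimal sketch of converting between them; the function names are made up for this illustration and are not part of Sugar or any library.

```python
# Sketch: convert between Hiragana and Katakana using Unicode code points.
# Hiragana letters U+3041..U+3096 line up with Katakana letters
# U+30A1..U+30F6, so the conversion is a fixed offset of 0x60.
# Function names here are hypothetical, chosen for illustration.

KANA_OFFSET = 0x60


def hiragana_to_katakana(text: str) -> str:
    """Replace each Hiragana letter with its Katakana counterpart."""
    out = []
    for ch in text:
        cp = ord(ch)
        if 0x3041 <= cp <= 0x3096:
            out.append(chr(cp + KANA_OFFSET))
        else:
            out.append(ch)  # leave Kanji, Latin letters, etc. untouched
    return "".join(out)


def katakana_to_hiragana(text: str) -> str:
    """Replace each Katakana letter with its Hiragana counterpart."""
    out = []
    for ch in text:
        cp = ord(ch)
        if 0x30A1 <= cp <= 0x30F6:
            out.append(chr(cp - KANA_OFFSET))
        else:
            out.append(ch)
    return "".join(out)


print(hiragana_to_katakana("ひらがな"))
print(katakana_to_hiragana("カタカナ"))
```

Characters outside the two ranges, such as the long-vowel mark U+30FC, are passed through unchanged in this sketch.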

The fourth is the Latin alphabet (Roma-ji). Words or names written in it are often used in technical documents and reports of foreign news.

Several thousand Kanji, about 50 Hiragana (or 70 if you count the ones with diacritical marks), and about the same number of Katakana are used in typical books and newspapers. The roughly 2,000 everyday-use Kanji (originally the Toyo Kanji list of 1,850 characters, since superseded by the Joyo Kanji list) are taught systematically in Japanese elementary and secondary schools.

Note that you can write complete text solely in Hiragana or Katakana. Children's books are usually written in Hiragana, and children learn Kanji gradually. Therefore, if you develop educational software, choosing the right character set for screen output is critical, as many children in the lower grades probably won't be able to read many of the everyday-use Kanji.
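One way an activity could check its own strings before showing them to young readers is to classify each character by Unicode block. This is only a rough sketch under simplifying assumptions: the function names are hypothetical, and the Kanji test covers just the main CJK Unified Ideographs block (U+4E00 to U+9FFF), not extension blocks or marks such as the iteration mark.

```python
# Sketch: find Kanji in a string so that text for young readers can be
# checked or rewritten in Kana. Names are hypothetical; the Kanji range
# is deliberately limited to the main CJK Unified Ideographs block.


def script_of(ch: str) -> str:
    """Classify one character by the Unicode block it falls in."""
    cp = ord(ch)
    if 0x3041 <= cp <= 0x309F:
        return "hiragana"
    if 0x30A0 <= cp <= 0x30FF:
        return "katakana"
    if 0x4E00 <= cp <= 0x9FFF:
        return "kanji"
    return "other"


def kanji_in(text: str) -> list:
    """Return the Kanji found in text, in order of appearance."""
    return [ch for ch in text if script_of(ch) == "kanji"]


def has_no_kanji(text: str) -> bool:
    """True if text contains no Kanji (Kana, Latin, punctuation are fine)."""
    return not kanji_in(text)


message = "日本語をべんきょうする"
print(kanji_in(message))
print(has_no_kanji("にほんごをべんきょうする"))
```

A real activity would more likely compare the Kanji it finds against the list taught up to a given school grade; that list is not included here.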

Kanji also make text efficient to read: each character carries meaning, and the boundaries between different kinds of characters in a text act as a visual cue to word boundaries.

Handling of Japanese characters

Japan pioneered multi-byte character representation. In the earlier encodings, several thousand characters, including Kanji, Hiragana, Katakana, and other graphical symbols, are each encoded in two 8-bit bytes (often in a 94×94 = 8836 code space). The single-byte 7-bit range is (mostly) compatible with ASCII, except that the backslash is replaced with the Yen symbol. The user was thus able to mix almost all of ASCII and the extended characters in plain text. The first multi-byte encoding scheme was JIS C 6226-1978. Its characteristics, such as the 94×94 format and the code point allocation of symbols, were later used in the early domestic encodings in China and Korea. Revisions and variations of this encoding are still in use today (perhaps more than Unicode-based representations). A joint project of the PRC, Japan, and South Korea is creating a unified version of Linux able to handle Chinese, Japanese, and Korean with equal facility, singly or in any combination. It is based on Unicode.
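The 94×94 layout can be inspected with the codecs that ship with Python. The sketch below assumes Python's standard `euc_jp` and `iso2022_jp` codecs; the helper name `kuten` is made up for this example. In EUC-JP, a two-byte character from the 94×94 table stores its row and cell numbers (each 1 to 94) with 0xA0 added to each byte.

```python
# Sketch: recover the row/cell position of a character in the 94x94
# table from its EUC-JP bytes. The helper name is hypothetical.


def kuten(ch: str) -> tuple:
    """Return (row, cell), each in 1..94, for a two-byte EUC-JP character."""
    raw = ch.encode("euc_jp")
    if len(raw) != 2 or raw[0] < 0xA1 or raw[1] < 0xA1:
        raise ValueError("not a character from the 94x94 table: %r" % ch)
    return raw[0] - 0xA0, raw[1] - 0xA0


for ch in "あ漢":
    row, cell = kuten(ch)
    print(ch, ch.encode("euc_jp").hex(), row, cell)

# The same two characters in the 7-bit ISO-2022-JP form, where escape
# sequences switch between ASCII and the two-byte set.
print("漢字".encode("iso2022_jp"))

print(94 * 94)  # size of the two-byte code space
```

ASCII characters encode to a single byte and are rejected by `kuten`, which mirrors the way single-byte and two-byte characters are mixed in plain text.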

While Unicode's promise of round-trip conversion between the existing domestic encodings and Unicode proved false (this is a disputed statement), Unicode is now fairly widely used to represent Japanese. Despite some reluctance, many systems support Unicode and use it as their internal representation, and this will be even more the case in the future.

It is notable that, given Japan's early adoption of computing, freely available resources, and developers' willingness to make their work public, a substantial part of the multilingualization and internationalization work in major open-source software projects was contributed by Japanese developers. Examples include large portions of Emacs, several free Unices, and portions of the X Window System.

Typing Japanese using Input Method programs

There are many methods of keyboard text input for Japanese. The most popular methods use phonetic conversion, pioneered in the Xerox J-STAR workstation in 1981. Users can type phonetically in kana or romaji (Romanization). The input is converted, word by word, or a phrase or sentence at a time, into a meaningful mix of Kanji and Kana. The conversion software may use a grammar analyzer in addition to a dictionary. In cases where the software cannot choose among multiple possibilities, the user chooses the preferred representation from a menu on the screen. Such a software module that converts Kana to a Kanji-Kana mix is called an Input Method or Input Method Editor.
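The two stages described above, phonetic input followed by dictionary conversion, can be sketched as a toy program. Everything here is an assumption made for illustration: the romaji table covers only a few syllables, the dictionary has three entries, and there is no grammar analysis. Real Input Methods are far more elaborate.

```python
# Toy sketch of an Input Method: romaji -> Kana -> Kanji candidates.
# The table, the dictionary, and the function names are all hypothetical.

ROMAJI = {
    "a": "あ", "i": "い", "u": "う", "e": "え", "o": "お",
    "ka": "か", "ki": "き", "ku": "く", "ke": "け", "ko": "こ",
    "ga": "が", "gi": "ぎ", "gu": "ぐ", "ge": "げ", "go": "ご",
    "sa": "さ", "shi": "し", "su": "す", "se": "せ", "so": "そ",
    "ji": "じ", "ta": "た", "chi": "ち", "tsu": "つ", "te": "て", "to": "と",
    "na": "な", "ni": "に", "nu": "ぬ", "ne": "ね", "no": "の",
    "ha": "は", "hi": "ひ", "fu": "ふ", "he": "へ", "ho": "ほ",
    "n": "ん",
}

# Reading in Kana -> candidate spellings, most likely first.
DICTIONARY = {
    "にほんご": ["日本語"],
    "かんじ": ["漢字", "感じ", "幹事"],
    "かな": ["仮名"],
}


def romaji_to_kana(romaji: str) -> str:
    """Convert romaji to Hiragana by longest match, left to right."""
    out = []
    i = 0
    while i < len(romaji):
        for size in (3, 2, 1):
            chunk = romaji[i:i + size]
            if chunk in ROMAJI:
                out.append(ROMAJI[chunk])
                i += len(chunk)
                break
        else:
            out.append(romaji[i])  # unknown input is passed through
            i += 1
    return "".join(out)


def candidates(romaji: str) -> list:
    """Return the choices a user would pick from in the on-screen menu."""
    kana = romaji_to_kana(romaji)
    return DICTIONARY.get(kana, [kana])  # fall back to plain Kana


print(romaji_to_kana("nihongo"))
print(candidates("kanji"))
```

When a reading has several candidates, as with "kanji" here, the list corresponds to the on-screen menu from which the user picks the intended spelling.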

There are many free implementations of such Input Methods. The first one was perhaps "Wnn" in 1985. It was later enhanced to handle Chinese and Korean and has been used successfully with terminal emulators and text editors like Emacs on Unix and the X Window System. Several other such systems, such as Canna, SKK, and IIIMF, are available. Such systems communicated with the X server at first through their own protocols and later through the standardized XIM or XInput protocols. The most current one is probably SCIM. While inputting Japanese text requires additional software modules, the environment has been well supported. The Yudit multilingual Unicode editor, available as Free Software, has its own Kanji conversion methods built in, as does the MULE (Multilingual Emacs) extension to Emacs. A Unicode version of Emacs has been in development for some time, with substantial Japanese development support.