ColingoXO

Revision as of 21:10, 12 October 2007

Purpose

Colingo is developing an activity for Sugar called ColingoXO. ColingoXO provides a platform for constructivist language learning by letting children splice video clips together to create and share video narratives. Children will be able to use any clip from the Colingo video library or record new clips with the XO's camera and microphone.

Beyond its role as a simple video editor, ColingoXO will focus on taking advantage of the XO's mesh capabilities. Videos will not be stored on individual XOs; instead they will be stored on and streamed from the School server (XS). Video narratives, which are essentially XSPF playlists, will be kept locally in the Journal and can be passed easily over the mesh because of their lightweight, text-based format.
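Because a narrative is just an XSPF playlist, it can be produced with Python's standard library alone. The sketch below assumes each clip is addressed by a URL on the school server (the `http://schoolserver/...` addresses are hypothetical) and stores the written phrase in each track's `title`; it illustrates the idea and is not the actual ColingoXO file format.

```python
import xml.etree.ElementTree as ET

XSPF_NS = "http://xspf.org/ns/0/"


def build_narrative(title, clips):
    """Return an XSPF playlist string for a list of (url, phrase) pairs."""
    # Declaring xmlns as a plain attribute puts every child in the XSPF namespace
    playlist = ET.Element("playlist", version="1", xmlns=XSPF_NS)
    ET.SubElement(playlist, "title").text = title
    track_list = ET.SubElement(playlist, "trackList")
    for url, phrase in clips:
        track = ET.SubElement(track_list, "track")
        # <location> points at the clip streamed from the school server (hypothetical URL)
        ET.SubElement(track, "location").text = url
        # <title> carries the written form of the spoken phrase
        ET.SubElement(track, "title").text = phrase
    return ET.tostring(playlist, encoding="unicode")
```

Since the result is plain text, sending a narrative over the mesh costs far less than sending the clips themselves, which stay on the XS.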

Interface

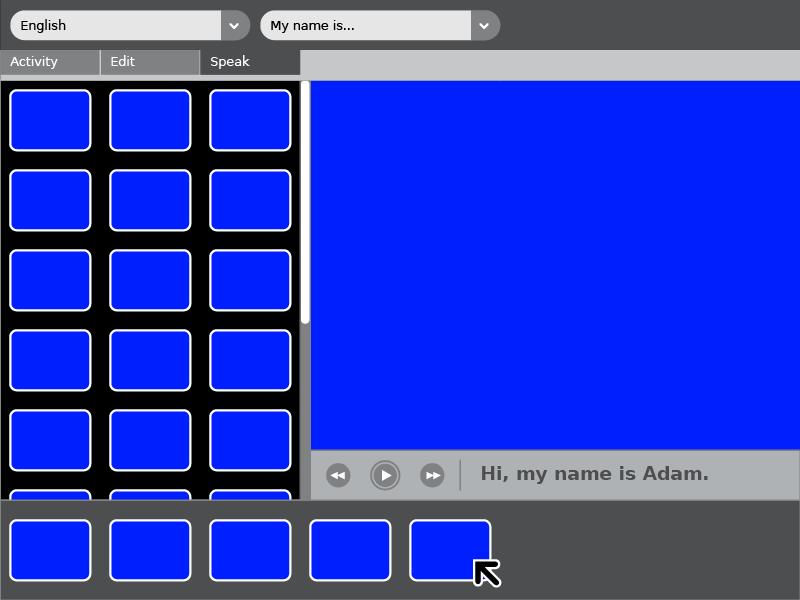

This interface design is by Eben Eliason, a lead UI designer for the OLPC project and the primary author of the OLPC Human Interface Guidelines. Please post comments on the discussion page, or ping lionstone in #olpc-content.

Interface description

The comboboxes at the top let the user switch the language or select a phrase. Typing words directly into the phrase box filters the results. The video bank on the left contains all of the videos that match the language and phrase filters in the toolbar. It scrolls for easy navigation and shows up to 15 clips before any scrolling is needed.
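The filtering behavior can be sketched in a few lines. The `Clip` record and the rule that every typed word must appear somewhere in the phrase are assumptions for illustration; the mockup does not specify how matching works.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    language: str  # e.g. "spanish"
    phrase: str    # written form of the spoken phrase
    url: str       # where the clip is streamed from (hypothetical)


BANK_ROWS = 15  # the video bank shows up to 15 clips before it scrolls


def filter_clips(clips, language, query=""):
    """Return clips in `language` whose phrase contains every typed word (case-insensitive)."""
    words = query.lower().split()
    return [
        clip for clip in clips
        if clip.language == language
        and all(word in clip.phrase.lower() for word in words)
    ]


def needs_scrolling(visible_clips):
    return len(visible_clips) > BANK_ROWS
```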

The timeline is the main interactive component, since it defines the dialogue for the session. The mockup shows it using the standard (not yet finished) Tray control, which will provide drag-and-drop, reordering, and paging automatically, and will help support networked collaboration on the timeline. This will make it easy for children to build a back-and-forth dialogue with each other, taking turns selecting video clips and ending up with a record of the conversation that they can play back in any language.
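One way to get the "play back in any language" property is for the timeline to record which phrase each child chose rather than a specific clip file, and to resolve phrases to clips only at playback time. The sketch below assumes a clip library keyed by `(phrase_id, language)`; all names are hypothetical, and in the real activity the Tray control would handle drag-and-drop itself.

```python
class Timeline:
    """Ordered record of a dialogue: one entry per turn."""

    def __init__(self):
        self.turns = []  # list of (speaker, phrase_id)

    def add(self, speaker, phrase_id):
        self.turns.append((speaker, phrase_id))

    def move(self, old_index, new_index):
        # Reordering, as dragging a clip within the timeline would do
        turn = self.turns.pop(old_index)
        self.turns.insert(new_index, turn)

    def playlist(self, library, language):
        """Resolve every turn to the clip for that phrase in `language`."""
        return [library[(phrase_id, language)] for _, phrase_id in self.turns]
```

A phrase with no clip in the requested language raises `KeyError` here; a fuller version would need a fallback.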

The video consumes the rest of the screen, and is a fair bit larger.

Beneath the video there are some basic controls. The play/stop button is self-explanatory. The next and previous buttons skip between the clips in the sequence, like tracks on a CD. Finally, the text beneath the video updates in real time to show the written form of the phrase being spoken during playback.
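The next/previous controls behave like a CD player's track buttons. A minimal sketch, assuming the sequence is a non-empty list of `(clip_file, phrase_text)` pairs and that navigation stops at either end rather than wrapping around (the mockup does not say which):

```python
class Sequence:
    """Track-style navigation over the clips in a timeline."""

    def __init__(self, clips):
        self.clips = clips  # list of (clip_file, phrase_text); assumed non-empty
        self.index = 0

    def current(self):
        return self.clips[self.index]

    def next(self):
        # Stop at the last clip rather than wrapping to the first
        if self.index < len(self.clips) - 1:
            self.index += 1
        return self.current()

    def previous(self):
        # Stop at the first clip
        if self.index > 0:
            self.index -= 1
        return self.current()

    def caption(self):
        # Text shown beneath the video while the current clip plays
        return self.current()[1]
```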

Functionality

Developers should look through the list of existing XO activities to see which code we can reuse. We'd like to build this in pygame as much as possible.

ColingoXO will need to read and write XML files that sometimes embed video clips and at other times refer to compressed clips streamed from a server.
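Both cases can share one file format if each clip element either names a URL to stream or carries the clip data inline. The `<dialog>`/`<clip>` schema and the base64 embedding below are hypothetical, a sketch of the idea rather than an agreed format:

```python
import base64
import xml.etree.ElementTree as ET


def write_dialog(clips):
    """clips: list of dicts with 'phrase' and either 'url' (str) or 'data' (bytes)."""
    dialog = ET.Element("dialog")  # hypothetical root element
    for clip in clips:
        el = ET.SubElement(dialog, "clip", phrase=clip["phrase"])
        if "url" in clip:
            el.set("src", clip["url"])  # streamed from the server
        else:
            el.set("encoding", "base64")  # embedded in the file itself
            el.text = base64.b64encode(clip["data"]).decode("ascii")
    return ET.tostring(dialog, encoding="unicode")


def read_dialog(text):
    """Return a list of (phrase, 'stream', url) or (phrase, 'embedded', bytes)."""
    result = []
    for el in ET.fromstring(text).iter("clip"):
        if el.get("src") is not None:
            result.append((el.get("phrase"), "stream", el.get("src")))
        else:
            result.append((el.get("phrase"), "embedded", base64.b64decode(el.text or "")))
    return result
```

Base64 inflates embedded data by about a third, which is one reason to prefer streaming from the server when it is reachable.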

It will need to pass the information extracted from the XML to an embedded video player and recorder capable of streaming, recording, and slicing Ogg files. Because the XO's resources are so limited, the player and recorder must be extremely lightweight. The existing Helix media activity already provides much of the player functionality, and the OLPC wiki offers help on programming the camera to record with GStreamer.
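For the recorder, GStreamer can capture the camera and microphone straight into an Ogg Theora/Vorbis file. The helper below only builds a pipeline description; it assumes GStreamer 1.0 element names (`v4l2src`, `alsasrc`, `videoconvert`, `theoraenc`, `vorbisenc`, `oggmux`, `filesink`). Builds from 2007 shipped GStreamer 0.10, where `ffmpegcolorspace` plays the role of `videoconvert`.

```python
def record_pipeline(path, audio=True):
    """Build a gst-launch style description that records the camera to an Ogg file.

    `path` is assumed to contain no spaces or quote characters.
    """
    parts = [
        # The muxer interleaves the streams into one Ogg file
        "oggmux name=mux ! filesink location=%s" % path,
        # Video branch: camera -> colorspace conversion -> Theora -> muxer
        "v4l2src ! queue ! videoconvert ! theoraenc ! queue ! mux.",
    ]
    if audio:
        # Audio branch: microphone -> Vorbis -> the same muxer
        parts.append("alsasrc ! queue ! audioconvert ! vorbisenc ! queue ! mux.")
    return " ".join(parts)
```

The string can be handed to `Gst.parse_launch()` from PyGObject, or tried from a terminal with `gst-launch-1.0 -e` so that the file is finalized when recording is interrupted.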

Supporting drag and drop of clips from the clip selector to the timeline will require... ideas, anyone?

Sharing Theora video clips and XML dialogs between XOs over the mesh network will require a networking interface; perhaps code can be borrowed from the Chat activity.
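Whatever transport is chosen, a stream of dialog files has to be framed so the receiver knows where one message ends. The sketch below uses a 4-byte length prefix over an ordinary stream socket; it is a generic illustration, not the Sugar collaboration API or the Chat activity's code.

```python
import socket
import struct


def send_message(sock, payload):
    """Send bytes prefixed with their length as a 4-byte big-endian integer."""
    sock.sendall(struct.pack("!I", len(payload)) + payload)


def _recv_exactly(sock, count):
    data = b""
    while len(data) < count:
        chunk = sock.recv(count - len(data))
        if not chunk:
            raise ConnectionError("peer closed the connection mid-message")
        data += chunk
    return data


def recv_message(sock):
    """Read one length-prefixed message."""
    (length,) = struct.unpack("!I", _recv_exactly(sock, 4))
    return _recv_exactly(sock, length)


if __name__ == "__main__":
    # Local demo: a connected pair of sockets stands in for two XOs
    a, b = socket.socketpair()
    send_message(a, b"<playlist/>")
    print(recv_message(b))
    a.close()
    b.close()
```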

Likewise, sharing Theora and XML between ColingoXO and colingo.org will require a shared API with the Drupal-based website.

Demo movies

Please take a look at:

All media are licensed under a Creative Commons Attribution 3.0 license.

Get involved!

We need your help to create a revolutionary educational tool completely in line with the XO's constructivist educational philosophy. If you are committed to the ideals of Free Software and are interested in helping to develop a platform for global language exchange, please say hello on IRC, check out our dev environment, and learn how to become a Colingo developer.

Code

Here's what we have so far:

A Python script to launch Totem fullscreen with a user-selected playlist

We are currently trying to Sugarize this by following the example of Kuku. Please ping lionstone or awjrichards in #olpc-content or #colingo on irc.freenode.net if you can help!

```python
#!/usr/bin/env python
# 123gtk.py
# Script to load Totem with a predefined playlist in fullscreen mode
# by Arthur Richards 2007-10-05
# Released under the GPL

import os
import subprocess

import pygtk
pygtk.require('2.0')
import gtk


class OneTwoThree:

    def launch_playlist(self, widget, data):
        # Each language has its own playlist: 123/<language>/play_all.m3u
        print("launching playlist for " + data)
        playlist = os.path.join("123", data, "play_all.m3u")
        # Passing a list of arguments avoids going through the shell
        subprocess.call(["totem", "--fullscreen", playlist])

    def delete_event(self, widget, event, data=None):
        print("delete event occurred, shutting down")
        # Returning False lets GTK go on to destroy the window
        return False

    def destroy(self, widget, data=None):
        gtk.main_quit()

    def __init__(self):
        self.window = gtk.Window(gtk.WINDOW_TOPLEVEL)
        self.window.connect("delete_event", self.delete_event)
        self.window.connect("destroy", self.destroy)
        self.window.set_border_width(10)

        # Define a box to stuff the labels and buttons into
        self.box1 = gtk.VBox(False, 0)
        # Add the box to the window
        self.window.add(self.box1)

        self.labelWelcome = gtk.Label("Welcome to ColingoXO!")
        self.labelInstruct = gtk.Label("Please select a language to begin your lesson")
        self.labelWelcome.set_alignment(0, 0)
        self.labelInstruct.set_alignment(0, 1)
        self.box1.pack_start(self.labelWelcome, False, False, 0)
        self.box1.pack_start(self.labelInstruct, False, False, 0)

        # Portuguese
        self.button = gtk.Button("Portuguese")
        self.button.connect("clicked", self.launch_playlist, "portuguese")
        self.box1.pack_start(self.button, True, True, 0)

        # Spanish
        self.button1 = gtk.Button("Spanish")
        self.button1.connect("clicked", self.launch_playlist, "spanish")
        self.box1.pack_start(self.button1, True, True, 0)

        # English
        self.button2 = gtk.Button("English")
        self.button2.connect("clicked", self.launch_playlist, "english")
        self.box1.pack_start(self.button2, True, True, 0)

        # Show the window and every widget packed into it
        self.window.show_all()

    def main(self):
        gtk.main()


if __name__ == "__main__":
    lesson = OneTwoThree()
    lesson.main()
```

Our sugarized SVG activity icon

Integrates cleanly into the activity bar and the Home view activity ring.

```xml
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<!-- Created with Inkscape (http://www.inkscape.org/) -->
<!-- by Ben Lowenstein 10-08-07 -->
<!DOCTYPE svg PUBLIC "-//W3C//DTD SVG 1.1//EN" "http://www.w3.org/Graphics/SVG/1.1/DTD/svg11.dtd" [
  <!ENTITY fill_color "#FFFFFF">
  <!ENTITY stroke_color "#000000">
]>
<svg
   xmlns:svg="http://www.w3.org/2000/svg"
   xmlns="http://www.w3.org/2000/svg"
   version="1.0"
   width="55"
   height="55"
   viewBox="0 0 55 55"
   id="svg2160"
   xml:space="preserve">
  <defs
     id="defs2183">
  </defs>
  <line
     style="fill:none;stroke:&stroke_color;;stroke-width:3.38274455;stroke-linecap:round;stroke-linejoin:round;display:inline"
     id="line2174"
     y2="34.398335"
     y1="41.699673"
     x2="28.363632"
     x1="32.03511"
     display="inline" />
  <path
     d="M 80.538794,14.634424 A 3.7837837,5.7432432 0 0 1 82.360937,3.919944"
     transform="matrix(4.5103261,1.9208816,0,1.503442,-344.36635,-144.93321)"
     style="fill:none;fill-opacity:1;stroke:&stroke_color;;stroke-width:1.99899995;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1"
     id="path4147" />
  <path
     d="M 80.538794,14.634424 A 3.7837837,5.7432432 0 0 1 82.360937,3.919944"
     transform="matrix(-4.5103261,-1.9208816,0,-1.503442,401.38327,199.09105)"
     style="fill:none;fill-opacity:1;stroke:&stroke_color;;stroke-width:1.99899995;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1"
     id="path5118" />
  <line
     display="inline"
     x1="35.277481"
     x2="28.57617"
     y1="30.112953"
     y2="34.113159"
     id="line5120"
     style="fill:none;stroke:&stroke_color;;stroke-width:3.38274455;stroke-linecap:round;stroke-linejoin:round;display:inline" />
  <line
     display="inline"
     x1="25.064001"
     x2="28.735477"
     y1="12.559251"
     y2="19.86059"
     id="line5122"
     style="fill:none;stroke:&stroke_color;;stroke-width:3.38274455;stroke-linecap:round;stroke-linejoin:round;display:inline" />
  <line
     style="fill:none;stroke:&stroke_color;;stroke-width:3.38274455;stroke-linecap:round;stroke-linejoin:round;display:inline"
     id="line5124"
     y2="20.145765"
     y1="24.145969"
     x2="28.522943"
     x1="21.82163"
     display="inline" />
</svg>
```
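Because the icon's colors are declared as the `fill_color` and `stroke_color` entities, the shell can recolor it by rewriting those two declarations before rendering. A rough sketch of that substitution (the function name and regular expression are illustrative, not Sugar's own code):

```python
import re


def recolor_icon(svg_text, fill, stroke):
    """Return the SVG text with new values for the fill_color and stroke_color entities."""
    for name, value in (("fill_color", fill), ("stroke_color", stroke)):
        # Replace the whole <!ENTITY name "..."> declaration; a lambda avoids
        # any backslash interpretation in the replacement text
        svg_text = re.sub(
            r'<!ENTITY %s "[^"]*">' % name,
            lambda match, n=name, v=value: '<!ENTITY %s "%s">' % (n, v),
            svg_text,
        )
    return svg_text
```

Every `&stroke_color;` reference in the drawing then picks up the new value automatically, which is why the strokes above use the entity instead of a literal color.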

A script for automagic DV capture

This should help OLPC video content creators running Linux (only tested on Debian Etch) who have the following installed:

- dvgrab
- ffmpeg2theora
- ffmpeg

The shell script will:

- Connect to an attached FireWire camera
- Capture all footage on the tape and split it by timestamp
- Save all files as Ogg (optimized for XO playback), DV, and FLV

```bash
#!/bin/bash
## Script to take a raw DV file and split it by timestamp
## Robin Walsh 10/2007

echo "Please input the series title. The resulting files will be placed"
echo "in a subdirectory by this name."
read -e seriestitle
echo "Now please give me a filename prefix for the DV files I'm about to split up."
read -e prefix

## First, let's create the directory structure.
mkdir -p "$seriestitle/dv" "$seriestitle/flv" "$seriestitle/ogg"

## Next, let's grab the DV from the camera and split it by timestamp into
## AVI files (--format dv2 writes type-2 DV AVI files).
dvgrab --autosplit --timestamp --format dv2 "$seriestitle/dv/$prefix-"

echo "I can wait here while you check for needed video adjustments."
echo "Just hit return when you're ready to convert the grabbed DV into ogg and flv."
read -e waiting

## Convert each captured AVI file into .flv (320x240, 44.1 kHz audio, 12 fps).
## Globbing instead of parsing `ls` keeps filenames with spaces intact.
for f in "$seriestitle"/dv/*.avi
do
    [ -e "$f" ] || continue   # skip if nothing was captured
    name=$(basename "$f" .avi)
    ffmpeg -i "$f" -s 320x240 -ar 44100 -r 12 "$seriestitle/flv/$name.flv"
done

## Convert each captured AVI file into Theora .ogg for XO playback
for f in "$seriestitle"/dv/*.avi
do
    [ -e "$f" ] || continue
    name=$(basename "$f" .avi)
    ffmpeg2theora -x 240 -y 160 -v 5 -a -1 -o "$seriestitle/ogg/$name.ogg" "$f"
done
```