One encyclopedia per child

Latest revision as of 20:18, 1 June 2014

Background

The purpose of this page is to encourage the quick generation of good content for initially populating the One Laptop Per Child (OLPC) laptop with a One Encyclopedia Per Child (OEPC).

Many scholars are self-taught, primarily through their exposure to an encyclopedia at an early age; the encyclopedia gave them a good start in life. How much more useful, then, could a hyperlinked encyclopedia be in the home of a child in a developing country?

Wikikids

Wikikids is a project for developing content on a dedicated wiki with a life of its own, where children, teenagers and adults can all contribute to building the encyclopedia. It was proposed at http://meta.wikimedia.org/wiki/Wikikids and later created in Dutch (15000+ articles), French (16000+ articles), Spanish (3500+ articles), Italian, Russian and English (900+ articles): http://wikikids.wiki.kennisnet.nl/, http://www.vikidia.org/, http://fr.vikidia.org/, http://es.vikidia.org and http://en.vikidia.org.

See also Wikis for children.

Current scripts

We have a set of Perl scripts that take snapshots of Wikipedia and download them, drawing from a parent page that lists the articles (at en.wikipedia.org/wiki/User:Sj/wp).

Some existing Perl scripts take in that wiki page and output multilingual static HTML, handling:

- one list of keywords (in one language)

- a list of languages to check -- following any interwiki links from the wiki pages defined in the first list

- removing a set of unwanted templates, navigation templates, and header/footer information, identified by their HTML elements

- leaving in or removing external links (toggled by an option in code)

- making all links to images and pages local, and removing any that are dead, e.g. wikilinks that point to articles not included in the snapshot (or perhaps these should link out to the web?)

- adding a new overall skin/style (with OLPC icon, etc.)
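The link-handling steps in the list above (making wikilinks local, dropping dead ones, and toggling external links) could look roughly like this in Python. This is a minimal sketch, not the existing Perl code: it assumes article HTML uses classic `/wiki/Title` page links, handles page links only (not image files), uses a hypothetical one-file-per-article layout (`local_filename`), and relies on a simple regular expression rather than a full HTML parser, so unusual or nested markup may slip through.

```python
import re
from urllib.parse import unquote

# Simple <a ... href="...">text</a> matcher; assumes anchors are not nested.
LINK_RE = re.compile(r'<a\s[^>]*href="([^"]+)"[^>]*>(.*?)</a>', re.DOTALL)


def local_filename(title: str) -> str:
    """Hypothetical snapshot layout: one HTML file per article."""
    return title.replace(" ", "_") + ".html"


def localize_links(html: str, included: set[str], keep_external: bool = False) -> str:
    """Point wikilinks at local files, unlink dead ones, optionally drop external links."""

    def rewrite(match: re.Match) -> str:
        href, text = match.group(1), match.group(2)
        if href.startswith("/wiki/"):
            path, _, fragment = href[len("/wiki/"):].partition("#")
            title = unquote(path).replace("_", " ")
            if title not in included:
                return text  # dead link: keep the words, drop the link
            target = local_filename(title) + (f"#{fragment}" if fragment else "")
            return f'<a href="{target}">{text}</a>'
        if href.startswith(("http://", "https://", "//")):
            # External link: the "option in code" from the list above.
            return match.group(0) if keep_external else text
        return match.group(0)  # leave any other link untouched

    return LINK_RE.sub(rewrite, html)
```

Keeping the visible text of a dead link, rather than deleting it, preserves the sentence the link sat in; linking out to the web instead would be a one-line change in the dead-link branch.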

The scripts could use some cleaning up: they should run off XML exports rather than HTML (perhaps using InstaView to display the result), and handle languages and other options (external links or not, images or not) cleanly. They could also use porting to Python, so that this kind of snapshot script could run on an XO, which doesn't natively have Perl.
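Running off XML exports rather than HTML mainly means reading pages out of the MediaWiki export format. A rough Python sketch using only the standard library might read an export and then collect the interlanguage links for the requested languages. It assumes interlanguage links appear inline in the wikitext as `[[fr:Title]]`, as they did on Wikipedia when this page was written (they are now largely stored in Wikidata instead); the function names are hypothetical.

```python
import re
import xml.etree.ElementTree as ET

# Inline interlanguage link such as [[fr:Eau]]. The prefix is filtered against
# the requested languages, so other prefixed links are ignored unless asked for.
INTERLANG_RE = re.compile(r"\[\[([a-z][a-z-]*):([^\]|]+)\]\]")


def local_name(tag: str) -> str:
    """Drop the '{namespace}' prefix that ElementTree adds to tag names."""
    return tag.rsplit("}", 1)[-1]


def read_export(xml_text: str) -> dict[str, str]:
    """Map each page title in a MediaWiki XML export to its revision wikitext."""
    pages: dict[str, str] = {}
    for page in ET.fromstring(xml_text).iter():
        if local_name(page.tag) != "page":
            continue
        title, text = None, ""
        for child in page.iter():
            name = local_name(child.tag)
            if name == "title":
                title = child.text
            elif name == "text":
                text = child.text or ""  # with several revisions, the last one wins
        if title:
            pages[title] = text
    return pages


def interlanguage_links(wikitext: str, languages: list[str]) -> dict[str, str]:
    """Return {language code: title} for the requested languages only."""
    wanted = set(languages)
    return {
        lang: title.strip()
        for lang, title in INTERLANG_RE.findall(wikitext)
        if lang in wanted
    }
```

Matching tags by local name keeps the reader working across export schema versions, which differ only in the namespace URI.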

Related snapshot scripts should take in:

- a set of keywords, piped through Wikiosity, to make a 10-article reading list

- a set of categories, which exports articles in that category and works from there (see Special:Export)
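For the category-driven script, one possible starting point is to ask the MediaWiki API for a category's member articles and then fetch each article through Special:Export. The sketch below only builds the request URLs; it assumes the English Wikipedia host and the standard `list=categorymembers` API query, and leaves the actual fetching (for example with `urllib.request.urlopen`), paging and rate limiting to the caller.

```python
from urllib.parse import quote, urlencode

# Assumption: English Wikipedia; swap the host for other languages.
WIKI = "https://en.wikipedia.org"


def category_members_url(category: str, limit: int = 50) -> str:
    """MediaWiki API query listing the articles in a category."""
    params = {
        "action": "query",
        "list": "categorymembers",
        "cmtitle": f"Category:{category}",
        "cmnamespace": 0,  # main (article) namespace only
        "cmlimit": limit,
        "format": "json",
    }
    return f"{WIKI}/w/api.php?{urlencode(params)}"


def export_url(title: str) -> str:
    """Special:Export address that returns one page as MediaWiki XML."""
    return f"{WIKI}/wiki/Special:Export/{quote(title.replace(' ', '_'))}"
```

The XML returned by each export URL is the same format an XML-based snapshot script would consume.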

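See: InstaView (http://en.wikipedia.org/wiki/User:Pilaf/InstaView) | Wikiosity (http://dev.laptop.org/git?p=projects/wikiosity;a=summary) | GearsWiki (http://gearswiki.theidea.net; requires Google Gears, uses InstaView for rendering, imports MediaWiki XML dumps)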

Viewpoint taken

A bottom-up approach to content development is to decide on the appropriate entries (headings) and then find suitable articles for inclusion. A top-down approach would categorize all suitable and desirable knowledge into a tree, in the style of the Dewey Decimal or Library of Congress classification. We believe that the bottom-up approach is more pragmatic, and that a top-down approach can follow.

Contribution

The alphabetical index to the OEPC can be created quickly by merging the entry headings from suitable printed encyclopedias and omitting entries that are obsolete or do not follow a neutral point of view. These entry headings are then used to pull in articles from the Simple English Wikipedia, so the OEPC can be built rapidly. When no suitable entry exists in the Simple English version, the article is retrieved from the full English Wikipedia instead, and marked in bold to indicate that it is advanced material.
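The index-building procedure above can be sketched in Python as follows. The data structures are hypothetical: heading lists stand in for printed encyclopedia indexes, the `excluded` set stands in for the editorial judgment about obsolete or non-neutral entries, and plain dictionaries stand in for the Simple English and full English Wikipedia. Bold is rendered as an HTML `<b>` tag on the assumption that the output is static HTML, and headings with no article in either source are simply skipped, a case the page does not address.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    heading: str
    text: str
    advanced: bool  # True when the article came from the full English Wikipedia


def build_index(heading_lists: list[list[str]], excluded: set[str]) -> list[str]:
    """Merge headings from several encyclopedias, drop excluded ones, sort alphabetically."""
    merged = {h.strip() for headings in heading_lists for h in headings if h.strip()}
    return sorted(merged - excluded, key=str.casefold)


def assemble(index: list[str], simple: dict[str, str], full: dict[str, str]) -> list[Entry]:
    """Prefer Simple English; fall back to full English and flag the entry as advanced."""
    entries = []
    for heading in index:
        if heading in simple:
            entries.append(Entry(heading, simple[heading], advanced=False))
        elif heading in full:
            entries.append(Entry(heading, full[heading], advanced=True))
        # Headings found in neither source are skipped in this sketch.
    return entries


def index_line(entry: Entry) -> str:
    """Render an index line, in bold when the entry is advanced material."""
    return f"<b>{entry.heading}</b>" if entry.advanced else entry.heading
```

For example, merging `["Atom", "Volcano", "Phlogiston"]` and `["Atom", "Photosynthesis"]` while excluding `"Phlogiston"` gives the index `["Atom", "Photosynthesis", "Volcano"]`; if only Photosynthesis is missing from Simple English, it is the one line rendered in bold.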

Limitations

This article is concerned only with the English content. The English version is the prototype; Spanish and other languages can certainly follow suit.

Updates are possible at a school linked to the Web. The OEPC can also be flushed out of flash memory when the memory is needed for other purposes, and reloaded at a school connected to the Web.

Advances

Volunteers are needed to extend the prototype into a full OEPC, ready for downloading to the OLPC.

Note that SOS Children's Villages has manually selected and cleaned up 4000 pages and 8000 images from Wikipedia (http://www.soschildrensvillages.org.uk/charity-news/education-cd.htm) for 8- to 15-year-old children, to fit on a CD. That selection will not fit on a single OLPC laptop.

Once the distributed text base is working, advanced readers will be able to pick up the SOS text that they do not have on their own laptops from their neighbors.
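How the distributed text base will work is not specified on this page. As a rough illustration only, a laptop could compare the pages it wants (for example, the SOS selection) against what it already holds and against inventories advertised by its neighbors, then decide whom to ask for each missing page. The function, neighbor names and inventories below are hypothetical, and the sketch ignores networking entirely.

```python
def plan_fetches(
    local: set[str],
    neighbors: dict[str, set[str]],
    wanted: set[str],
) -> tuple[dict[str, list[str]], list[str]]:
    """Decide which neighbor to ask for each wanted page missing locally.

    Returns (plan, unavailable): plan maps neighbor name -> pages to request,
    and unavailable lists pages that no neighbor has.
    """
    plan: dict[str, list[str]] = {}
    unavailable: list[str] = []
    for page in sorted(wanted - local):
        # Deterministic order by name; a real system might prefer the nearest peer.
        for name in sorted(neighbors):
            if page in neighbors[name]:
                plan.setdefault(name, []).append(page)
                break
        else:
            unavailable.append(page)  # must wait for a school connected to the Web
    return plan, unavailable


plan, unavailable = plan_fetches(
    local={"Atom"},
    neighbors={"xo-b": {"Atom", "Volcano"}, "xo-a": {"Comet"}},
    wanted={"Atom", "Comet", "Glacier", "Volcano"},
)
# plan == {"xo-a": ["Comet"], "xo-b": ["Volcano"]}, unavailable == ["Glacier"]
```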

The index page

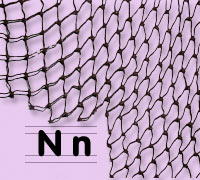

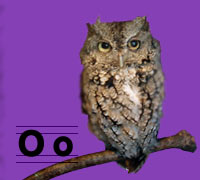

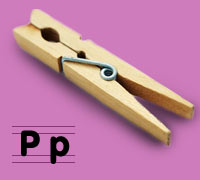

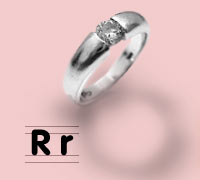

This is a showcase for the prototype main page of the One Encyclopedia Per Child. The images are shown here at full size; in the final format, however, they will appear in a 6 by 5 matrix, with the four corners left white. When the child clicks on an image, it will take the child to the appropriate volume of the encyclopedia.
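The grid arithmetic is easy to check: a 6 by 5 matrix has 30 cells, and leaving the four corners white leaves 26 cells for volume images, the same as the number of letters in the English alphabet. The page does not say that volumes map to letters, so the A to Z labels below are an assumption, as is reading "6 by 5" as 6 columns by 5 rows. The sketch computes which cells hold a volume and which volume a click on a given cell leads to.

```python
import string
from typing import Optional

# Assumption: "6 by 5" is read as 6 columns and 5 rows.
ROWS, COLS = 5, 6


def volume_cells(rows: int = ROWS, cols: int = COLS) -> list[tuple[int, int]]:
    """Return (row, col) cells in reading order, skipping the four white corners."""
    corners = {(0, 0), (0, cols - 1), (rows - 1, 0), (rows - 1, cols - 1)}
    return [(r, c) for r in range(rows) for c in range(cols) if (r, c) not in corners]


# Hypothetical labelling: one volume per letter, A to Z, in reading order.
VOLUMES = dict(zip(volume_cells(), string.ascii_uppercase))


def volume_at(row: int, col: int) -> Optional[str]:
    """Volume the child reaches by clicking cell (row, col); None for a white corner."""
    return VOLUMES.get((row, col))


def render() -> str:
    """Text preview of the grid, with '.' marking the white corners."""
    return "\n".join(
        " ".join(VOLUMES.get((r, c), ".") for c in range(COLS)) for r in range(ROWS)
    )
```

Under these assumptions the preview's first row reads `. A B C D .`, the three middle rows hold E to V six at a time, and the last row reads `. W X Y Z .`.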

This page has been placed on this wiki to elicit suggestions for improvement from the community. Please contribute your thoughts by clicking on the discussion tab.

Links (on various wikis)

- Prototype: http://es.vikidia.org/wiki/Vikidia:Portada, http://fr.vikidia.org/

- Wikis for children

- Hardware: Laptop Hardware specification

- Software task list: OLPC software task list

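- OEPC prototype: http://en.wikipedia.org/wiki/Wikipedia:One_Encyclopedia_Per_Child

- English Wikipedia on DVD (2000 selected articles): http://wiki.laptop.org/go/Wikipedia#Wikipedia_on_DVD

- The Wikikids idea: http://meta.wikimedia.org/wiki/Wikikids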