XS Server Services

<noinclude>{{OLPC}}{{Translations}}</noinclude>

[[Category:Software]]

[[Category:Developers]]

[[Category:SchoolServer]]

These are services that the [[School server]] will provide. Additional services under consideration for deployment are listed [[XS Server Discussion|separately]]. Service meta issues such as installation and management are discussed on [[XS Server Specification| School server specifics]]. A [[XS_Service_Description|detailed description]] of the current implementation of these services is also available, along with [[Schoolserver_Testing|Test Instructions]].

''Please help by adding links to existing pages discussing these topics, if you are aware of them.''

=Library=

The school library provides media content for the students and teachers using a [[School server]]. This content may either be accessed directly from the school library or downloaded onto the laptops. The content in the school library comes from a variety of sources: OLPC, [[OEPC]], schoolbooks and interesting articles from all countries (but most prominently the students' own country), the regional school organization, other schools, and the teachers and students in the school. It includes both software updates and repair manuals for the laptop, which should ideally be copied from http://wiki.laptop.org/.

Are any books (digital documents) downloaded to the laptops, or is most content accessed from the school server?

A number of library attributes (self-management, scalability, performance, identity) are discussed in their own sections below. It is clear, however, that the library is not a single service but rather a set of services (wiki or wiki+, HTTP cache, BitTorrent client, codecs, etc.) which combine to provide the Library functionality.

==Caching==

The library at its core is a "local copy" of a library assembled by the school's country and region, with help from OLPC. This set of documents is assembled on a country/region server, and is named a Regional Library. Each [[School server]] has a local library consisting of documents submitted by students and teachers using that server. This is named a Classroom Library. The collection of Classroom Libraries provided by the School servers at a particular school (there will typically be 2 to 15) is named a School Library.

How much of the library is local and how much is simply a cache of a larger regional/country library? Can a transparent HTTP cache applied to a centralized library server provide the desired user experience (quick access to common books and access to a large catalog of books)?

==Discovery==

==Traditional Media==

Basic bandwidth assumptions: there will be sporadic connections between XOs via the mesh, fast connections between XOs and school servers [SSs], possibly sporadic connections between SSs and the internet at large via regional servers [RSs], and generally fast connections (at least fast downloads) between RSs and the internet.

What is the mechanism for user submissions of traditional (static) media to the library? The centralized library will probably have a human-filtered request mechanism. What about the local library? Will teachers (and students) have a place to put resources they create where all can retrieve them?

Notion: XOs can maintain an index of materials seen on local XOs, school servers and regional servers, and can send requests for material over the mesh or network. School servers can maintain a larger index of the same kind, collating requests for materials and evaluating whether they are likely to be fulfillable over the mesh. Those that aren't can be queued for request from regional servers or the internet.
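The index-and-queue notion above can be sketched as a small data structure. This is only an illustration under assumptions not made on this page: an in-memory index, location strings prefixed with <code>xo:</code>, <code>xs:</code> or <code>rs:</code>, and a single size threshold (<code>mesh_limit_bytes</code>) standing in for "likely to be fulfillable over the mesh". All class and field names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    LOCAL_MESH = "fetch over the mesh"
    QUEUE_UPSTREAM = "queue for a regional server or the internet"


@dataclass
class IndexEntry:
    """Hypothetical index record; fields mirror metadata mentioned on this page."""
    content_id: str
    size_bytes: int
    # Where copies are known to live, e.g. "xo:<name>", "xs:<name>", "rs:<name>".
    locations: list[str] = field(default_factory=list)


class SchoolIndex:
    """Hypothetical school-server index that collates requests for material."""

    def __init__(self, mesh_limit_bytes: int = 50 * 1024 * 1024) -> None:
        self.entries: dict[str, IndexEntry] = {}
        self.upstream_queue: list[str] = []
        # Assumed cut-off for what is "likely to be fulfillable over the mesh".
        self.mesh_limit_bytes = mesh_limit_bytes

    def add(self, entry: IndexEntry) -> None:
        self.entries[entry.content_id] = entry

    def request(self, content_id: str) -> Route:
        entry = self.entries.get(content_id)
        # A small enough copy on a local XO or school server is served over the
        # mesh; everything else is queued for the upstream link.
        if entry is not None and entry.size_bytes <= self.mesh_limit_bytes and any(
            loc.startswith(("xo:", "xs:")) for loc in entry.locations
        ):
            return Route.LOCAL_MESH
        self.upstream_queue.append(content_id)
        return Route.QUEUE_UPSTREAM
```

A real implementation would also weigh latency, estimated connection speed and index freshness, as the metadata discussed in this section suggests.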

==Collaborative and Dynamic Media==

: Indices would include a variety of useful metadata, including size, location, latency/availability (available via torrent, available via FTP, estimated connection speed), and rough content-based IDs.

: Servers and perhaps XOs would maintain basic statistics on which materials and types were most requested, with special attention to requests for large files (which are harder to fulfill without saturating the network), and on when nearby queues and indexes were last updated.

A reasonably accurate method is needed for keeping these indices mutually up to date, for sending feedback to requestors ("This material is 9GB in size; the request is unlikely to be fulfilled. Please contact your network maintainer for more information."), and for sending feedback to content hosts ("This file is very frequently requested. Please seed a torrent network with it. <links>").
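A sketch of the two kinds of feedback described above, assuming a simple per-server request counter. The thresholds (<code>max_fulfillable_bytes</code>, <code>seed_threshold</code>) and all names are hypothetical; only the message wording comes from this page.

```python
from __future__ import annotations

from collections import Counter

GB = 1024 ** 3


class RequestStats:
    """Hypothetical per-server request statistics used to generate feedback."""

    def __init__(self, max_fulfillable_bytes: int = 2 * GB, seed_threshold: int = 100) -> None:
        self.counts: Counter[str] = Counter()
        self.max_fulfillable_bytes = max_fulfillable_bytes  # assumed size limit
        self.seed_threshold = seed_threshold  # assumed "very frequently requested" count

    def record(self, content_id: str) -> None:
        self.counts[content_id] += 1

    def requestor_feedback(self, size_bytes: int) -> str | None:
        """Warn a requestor when a file is too large to move without saturating the link."""
        if size_bytes <= self.max_fulfillable_bytes:
            return None
        return (f"This material is {size_bytes / GB:.0f}GB in size; the request is "
                "unlikely to be fulfilled. Please contact your network maintainer "
                "for more information.")

    def host_feedback(self, content_id: str) -> str | None:
        """Ask a content host to seed a frequently requested file on a torrent network."""
        if self.counts[content_id] < self.seed_threshold:
            return None
        return "This file is very frequently requested. Please seed a torrent network with it."
```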

What about local wikis? Are they supported on the School server? To some extent, a wiki may be used as the means for uploading and cataloging static media in a school library.

On the "internet at large" side of indexing are national and global libraries, metadata repositories such as the [http://www.oclc.org/default.htm OCLC], global content repositories such as Internet Archive mirrors, and a constellation of web pages organized by various search-engine indexes [cf. open search, Google]. These groups are connected to each other by fast links, so various categorization, discussion, and transformation of material (for instance, to compressed or low-bandwidth formats) can be done there in preparation for sending it back to the less swiftly connected parts of the network. <small>(For instance, one often scans high-bitrate multimedia over a local network at much lower bitrates, edits the low-bitrate material, and sends the collected edits back to be applied to the original media on high-power machines over a high-bandwidth network.)</small>

==Scalability==

A pre-determined subset of the Regional Library is mirrored in the School Library; the remainder of the documents are available over the Internet connection.

Caching of recently accessed documents will be done to provide the best user experience given limited connectivity, but it also has the effect of minimizing the load on the regional library. This will be performed by the [[#HTTP_Caching|transparent HTTP proxy]].
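The page does not specify an eviction policy. Purely as an illustration, assuming least-recently-used (LRU) eviction under a byte budget, the cache behaviour looks like this; in practice the transparent proxy's own cache would do this work, and the class name is hypothetical.

```python
from __future__ import annotations

from collections import OrderedDict


class DocumentCache:
    """LRU cache of documents bounded by total size in bytes (illustrative only)."""

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self._docs: OrderedDict[str, bytes] = OrderedDict()

    def get(self, url: str) -> bytes | None:
        doc = self._docs.get(url)
        if doc is not None:
            self._docs.move_to_end(url)  # mark as most recently used
        return doc

    def put(self, url: str, body: bytes) -> None:
        if len(body) > self.capacity_bytes:
            return  # too large to cache at all
        if url in self._docs:
            self.used_bytes -= len(self._docs.pop(url))
        self._docs[url] = body
        self.used_bytes += len(body)
        # Evict least recently used documents until we are back under budget.
        while self.used_bytes > self.capacity_bytes:
            _, evicted = self._docs.popitem(last=False)
            self.used_bytes -= len(evicted)
```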

While a peer-to-peer protocol may be used to help scale the Regional Library, it must be sensitive to the fact that a large number of schools will have very limited upstream bandwidth and would not participate as peers. And while this protocol may serve well for mirroring content onto the school servers, using it for student accesses to the library would require a more complex client than a browser (see [[XS_Server_P2P_Cache|P2P Caching]]).

At the school level, there is some possibility of taking advantage of local connectivity to distribute the document cache among all servers in the school. Unfortunately, the exact piece of software we need hasn't been developed yet (see [[XS_Server_P2P_Cache|P2P Caching]]).
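One way to split a document cache across the servers of a school, assuming every server knows the full list of its peers, is rendezvous (highest-random-weight) hashing: each URL is cached by the server with the highest hash score for it. This is a swapped-in technique for illustration, not an existing XS feature, and the server names are hypothetical.

```python
from __future__ import annotations

import hashlib


def _score(server: str, url: str) -> int:
    """Deterministic pseudo-random weight for a (server, url) pair."""
    digest = hashlib.sha256(f"{server}|{url}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def owner(url: str, servers: list[str]) -> str:
    """Return the server responsible for caching `url` (rendezvous hashing)."""
    if not servers:
        raise ValueError("no servers available")
    return max(servers, key=lambda s: _score(s, url))
```

Because each URL's owner is computed independently, removing a server only moves the URLs it owned, and adding one only takes over the URLs it now wins.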

Good sources of ideas include Andrew Tanenbaum's distributed operating system Amoeba, with its transparent and performant Bullet File Server. This could be expanded into a growing, global, distributed RAID file system that would never run out of storage, using something like the ATA over Ethernet protocol, GoogleFS, or possibly something better.

==Self-management==

What is the mechanism for user submissions of media to the library? Teachers (and students) should certainly have a place to put resources they create where all can retrieve them.

What about local wikis? Should they be supported on the School server?

They are probably best replaced with a more function-oriented information organization tool such as Rhaptos or Moodle, and an online extension of the Journal which supplants blogging.

===Repository===

Content centered, but allowing collaboration in building new content. See http://cnx.org for an example. The service is provided as open source software under the name [http://rhaptos.org/ Rhaptos]. It is Python-based.

===Collaborative/Publishing/Learning Management System===

Could Moodle be an option? See http://moodle.org

:Exchanging published work with other communities could be done using a USB key, taking a class ''portfolio'' (using Moodle pages) to another village. Distance collaboration can also be done asynchronously: each school has its own page or set of pages in Moodle, and these would work like standard mail, travelling by land to another school, where kids contribute, comment and peer-assess, and then back again.--Anonymous contributor

===A Mini-Moodle Plan===

:These are draft notes from Martin Langhoff, [http://moodle.org/ Moodle] developer (14/03/2007): We are keen on helping prepare, package and customise Moodle for deployment in this environment, and have experience with Debian/RPM/portage packaging and auth/authz/SSO. I lurk on laptop-dev and sugar-dev and am keen to discuss this further. Right now I am reading up on Bitfrost.

There has been some private correspondence between Moodle devs (MartinD, MartinL) discussing an overview of the main work areas for Moodle on the XS:

* Packaging (RPM?)
* Provide an out-of-the-box config that Just Works
* Integration with auth / SSO (Bitfrost)
* Integration with group / course management (Is this too structured ;-) ?)
* Replace mod/chat and messaging infrastructure with mod/chat-olpc that hooks into Sugar chat/IM
* (maybe) Replace mailouts with OLPC infrastructure for email
* Work on an XO theme and UI revamp

(MartinL is happy to help on all except the theme/UI, due to sheer lack of talent.)

That covers the basics of getting an initial integration in place. The fun work will be in creating activity modules that make sense in an XO environment.

: Maybe this should be moved to a subpage?

* Just thought I'd let you guys know that there is a project run by the OU (UK) to develop an offline version of Moodle. It might be something you could use and contribute to, as its purpose sounds like it overlaps with yours. You can find out more at http://hawk.aos.ecu.edu/moodle/ (just sign up to view the course), and let's see how we can make the two projects help each other. In particular, from the list above we are focusing on items 2, 3 and 4, so our work may provide a reference for you. Colin Chambers 13:50 03/12/2007

==Bandwidth Amplification of Local Content==

This refers to the problem of supplying content posted to a School Library to a large number of other schools. This is a problem because of the limited uplink bandwidth available at a typical school. Unfortunately, the architecture most useful for nonprofit content distribution, peer-to-peer (P2P), is not well suited for use by school servers on highly asymmetrical (DSL, satellite) network connections.

One solution is a well-connected server with a large amount of storage, provided at the regional or country level, which will mirror the unique content from each school. This has the added benefit of backing up that content. P2P access protocols may be used to reduce the load on these (seed) servers by making use of any regional schools with good uplink connectivity.

: Here are some thoughts on solutions and challenges in this area. Make sure that the upstream bandwidth is fully utilized all the time. That will require some prioritization so that active user traffic (e.g. a web browser requesting a page) goes first. After all "real-time" user traffic is sent upstream, the XS can use the available bandwidth to push uploads of other material. The basic idea is that schools upload large files overnight, while the interactive traffic generated directly by XOs goes out as soon as possible. This may require space on the XS where files going upstream can be stored while awaiting upload. It also needs support for file transfers that can start, stop, and then resume where they left off.
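The prioritisation and start/stop/resume behaviour described above can be outlined as a scheduler called once per time slice. Assumptions: only two traffic classes, a fixed per-slice byte budget standing in for the uplink, and hypothetical names throughout; a real XS would more likely rely on kernel traffic shaping than on Python code.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Upload:
    """A large file stored on the XS while it waits for spare uplink capacity."""
    name: str
    size: int
    sent: int = 0  # resume offset: bytes already delivered upstream

    @property
    def done(self) -> bool:
        return self.sent >= self.size


class UplinkScheduler:
    """Interactive traffic is sent first; queued bulk uploads use the leftover budget."""

    def __init__(self) -> None:
        self.interactive: deque[int] = deque()  # bytes still to send per interactive request
        self.bulk: deque[Upload] = deque()

    def tick(self, budget: int) -> int:
        """Spend up to `budget` bytes of uplink; return the bytes used by bulk uploads."""
        while self.interactive and budget > 0:
            sent = min(self.interactive[0], budget)
            budget -= sent
            if sent == self.interactive[0]:
                self.interactive.popleft()
            else:
                self.interactive[0] -= sent  # finish next slice, still ahead of bulk
        bulk_used = 0
        while self.bulk and budget > 0:
            upload = self.bulk[0]
            chunk = min(budget, upload.size - upload.sent)
            upload.sent += chunk  # progress is kept, so a stopped transfer resumes here
            budget -= chunk
            bulk_used += chunk
            if upload.done:
                self.bulk.popleft()
        return bulk_used
```

Every slice's budget is spent whenever there is anything to send, which is the "fully utilized all the time" goal; interactive bytes simply always win.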

: The second basic point is to compress before uploading. There's a trade-off between CPU utilization and bandwidth utilization (i.e. compressing every packet can bog down the XS CPU), so it probably makes sense to compress only large, non-real-time uploads.
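A minimal sketch of compressing only large, non-real-time uploads, assuming the standard-library <code>zlib</code> module and a hypothetical 1 MiB threshold. It keeps the compressed form only when it actually saves bytes, since media such as JPEG or video is usually already compressed.

```python
from __future__ import annotations

import zlib

COMPRESS_THRESHOLD = 1024 * 1024  # hypothetical cut-off: 1 MiB


def prepare_upload(payload: bytes, realtime: bool) -> tuple[bool, bytes]:
    """Return (compressed?, data) for an upload waiting on the XS."""
    if realtime or len(payload) < COMPRESS_THRESHOLD:
        return False, payload  # not worth spending XS CPU time on
    packed = zlib.compress(payload, 6)
    if len(packed) >= len(payload):
        return False, payload  # incompressible data gains nothing
    return True, packed
```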

: The core challenge may be how to implement this without breaking common applications. For example, you want users to upload content to any HTTP-based app (wiki, blog, etc.), and those may not be hosted on the school network, so you have no control over them. Therefore, they may not support a start, stop and resume upload paradigm. One idea is to intercept all HTTP POST messages on the XS. Those over a certain size get passed to a regional server. Once the regional server receives the full POST message with its data, it re-initiates the HTTP POST and rapidly completes the transaction. That will require some kind of caching or spoofing code on the regional server.

: That complexity could be avoided by constraining large uploads to servers hosted by the school system. However, it may still require rewriting the hosting applications. For example, uploads to a school-hosted wiki can be intercepted by the XS, which then does a remote copy (rcp), FTP upload or similar to the right directory on the wiki, and the content becomes available to everyone. That requires a wiki which allows content to be posted just by placing it in the right directory. It may not be trivial, but it could be a much easier problem to solve than trying to spoof HTTP POSTs.

: I hope that's not too general a comment for this page and I hope it makes sense. In short, if you only have a small amount of upstream bandwidth, use what you have all the time and make every bit count.

--[[User:Gregorio|Gregorio]] 16:04, 7 December 2007 (EST)

==Security and Identity==

The Library uses the identity convention introduced by [[Bitfrost]]. When a laptop is activated, it is associated in some way (TBD) with a school server. This is the School server hosting the Classroom Library that a student is allowed to publish onto. Students not associated with a Classroom Library only have read permissions on the Library.
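The publishing rule above reduces to a simple check. This sketch assumes each activated laptop records the identifier of its associated school server (the page leaves the association mechanism TBD) and does not model Bitfrost itself; all names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Laptop:
    serial: str
    school_server: str | None  # None if not associated with any Classroom Library


def can_read(laptop: Laptop, library_server: str) -> bool:
    """Every student may read the Library."""
    return True


def can_publish(laptop: Laptop, library_server: str) -> bool:
    """Only the Classroom Library on the laptop's own school server accepts uploads."""
    return laptop.school_server is not None and laptop.school_server == library_server
```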

=Backup=

According to [[OLPC_Human_Interface_Guidelines/The_Laptop_Experience/The_Journal|this description of the Journal]], it will provide automatic backup to the [[School server]], with a variety of restore options.

Is this a backup (sync) or just storage for content ''overflowed'' from the laptops?

What are the plans for providing additional storage to users of the XO laptops? How does the Journal handle filling up the available storage on the XO, or its allocated storage on the School server?

Should the school provide automatic backup of the laptop contents?

|||

=Network Router= |

|||

The [[School server]] is first and foremost a node in the [[wireless mesh]] which provides connectivity to the larger internet. |

|||

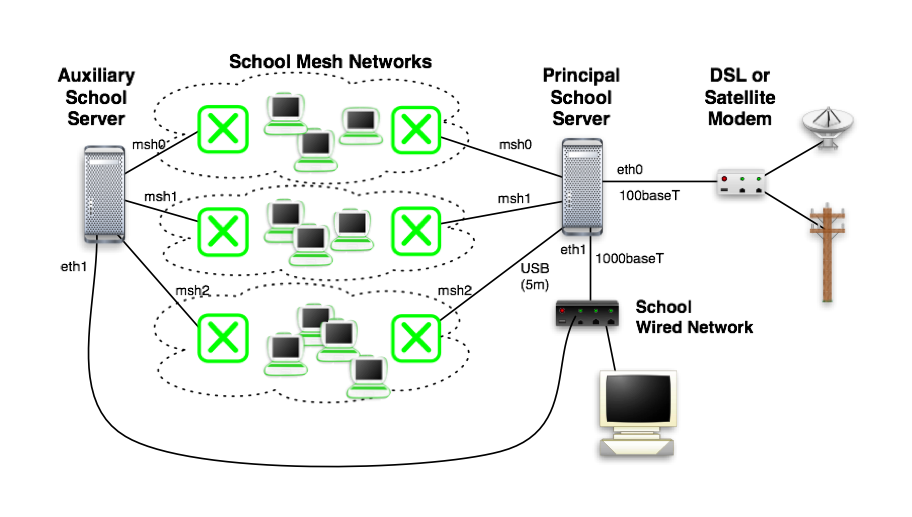

[[Image:XS_Usage_Dual.png]] |

|||

A starting assumption is that it is largely a transparent [[router]]. It does not perform any network address translation, and very little packet filtering. It will perform [[bandwidth shaping]] to ensure fair access to the internet. |

:::<i>Note: March 2, 2008.

:::In Peru it will be impossible for the School Server to behave as a transparent router, because:

:::a) The most searched-for word in Peru is "sex" (I developed commercial Internet networks and ISPs in the mid-1990s, and my personal findings match what other studies have shown). Peruvian law places responsibility for viewing pornography on the owner or administrator of the Internet Cafe. For legal purposes, each group of XOs + the School Server + the teacher in charge of the whole system will be considered an "Internet Cafe", so some kind of "porn" filtering must be provided. The penalty for those responsible is jail, and every "Internet Cafe" in Peru must provide a "safe" zone for children (special computers with strong filtering). That is the law.

:::b) There are enough IPv4 addresses, but in Peru getting them is a cumbersome task. From first-hand experience: it is not impossible, but the big telecoms absorbed the address blocks a long time ago. The American authorities in charge of assigning IP blocks tell us: "ask your telecoms, they have a ton of IP numbers that are not in use". Telecomos (THE telecom) here in Peru has normally not been happy with Internet access that doesn't use its infrastructure (they will not provide the VSATs, so they will have no interest in helping with IPs for a "foreign" network; they will tell us to get IP numbers from the [[VSAT]] provider).

:::c) Public IPs are exposed to Internet attacks (such as DoS, Denial of Service).

:::d) It will be mandatory to "block" some protocols, such as special video protocols, to avoid unnecessary use of the bandwidth that would otherwise turn the project into a short-lived idea (no bandwidth or money can support 100 XO computers requesting different videos). In extreme cases a cache system or HTTP filtering can be established to avoid big images (entirely possible), movie downloads (possible), sites that use Flash (nothing against Flash, just an example), or any other content that is "heavy". In the old Internet days we were able to download a big file, but it arrived "chunked" and we had to "rebuild" it on our computers. Since there will not be enough bandwidth in Peru, we must analyze the issue and present solutions to these and similar situations.

:::In my opinion, there is a need for [[NAT]] (Network Address Translation) to avoid some of these problems. At the same time, the School Server can provide a "caching" service to make efficient use of the always scarce Internet bandwidth that the schools will get.

:::All this runs counter to what we think we want for the children: freedom of choice and direct access to the Internet (each XO with its own public IP, and the child able to run an Internet server if he/she wants to and knows how; they will know, for sure!). So the final question is: how much liberty are we going to give to the end user (kid/young person) in charge of his/her XO?

::: Javier Rodriguez. Lima, Peru. info@olpc-peru.info --[[User:Peru|Javier Rodriguez. Lima, Peru]]</i>

::DSL, satellite, and for that matter UMTS and GPRS, are scarce commodities that you still have to pay royalties for, as they are run by monopolies. The bandwidth could be greatly expanded if we managed to produce cheap waterproof "datawashlines": thin copper telephone cords carrying low-voltage power, with wireless and USB Ethernet repeaters every 100 meters or however much it takes. These lines could be cheaply and quickly rolled out along train tracks, through subway tunnels, lakes, rivers and oceans, alongside roads and paths, and through forests to the most remote villages, cabins or points of sight. The routers would be self-learning, automatically connecting every device's Ethernet address to all the others over a usually redundant number of routes.

::: <i>For Peru: all networking to reach the main Internet must be done using wireless or some other alternative method; it is not possible to use copper or any other "land" line. If the XO computers are installed in the poorest part of the country (above 4,000 meters, or about 13,000 feet, altitude), you can drive for 3 hours without finding another community or town, and drive for half a day to reach the nearest bigger town that has regular electricity and some kind of Internet connection (normally an Internet Cafe or "cabina internet"). By the way, the "Internet Cafe" at the nearest place where there is Internet will have computers running Windows. Finding one with Linux will be a huge task. No, they will not install any kind of Linux on their computers (it has been tried before at the national level, and all Peruvian Linux users know that it is not easy to teach, show or spread the word about the nice features of Linux systems). Finally, we, OLPC, are an educational project, not a computer project, so we are not tied to any hardware or software (or are we?). Distances are huge: no copper lines can be used, wireless is a better option, radio (old short-wave radio) should be studied, and "mule" systems must be evaluated (we are studying the issue here). --[[User:Peru|Javier Rodriguez. Lima, Peru]]</i>

:Providing IPv6 connectivity from the mesh to the internet will require the server to provide a tunnel over IPv4 to the closest IPv6 network, since most sites will not be providing direct IPv6 connectivity. --[[User:CScott|cscott]]

::IPv4 is doomed to be completely replaced long before the 32-bit Unix clock rollover in 2038, so we should not worry about it too much, but rather create incentives to upgrade to IPv6 and support IPv4 only sparingly.

==HTTP Caching==

The only packet filtering proposed is a transparent proxy on port 80, which will allow commonly accessed HTTP (web) content to be cached locally. This will reduce the load on the internet connection, as well as the response time seen by a user. This may be relied upon to implement the [[#Library|School library]].

:Again, due to IPv6/IPv4 interoperability issues, the proxy can't be transparent, unless we're willing to do [[NAT]] for the laptops to give them a routable IPv4 address. --[[User:CScott|cscott]]

The problem here is the availability of suitable HTTP caching software. The most popular open source option, [http://www.squid-cache.org squid], supports both IPv4 and IPv6 from version 3.1, and can be built without many of its additional features where they are not needed.

:There are other HTTP caches that are less over-engineered and easier to configure than Squid, most prominently Roxen, Apache and wwwoffle; a new, slim one would be easy to write in Perl or possibly Python.

A more intelligent caching/redirection scheme may be provided by [http://codeen.cs.princeton.edu/ CoDeeN]. Unfortunately, it is built on top of commercial proxy software.

A third option (potentially related to CoDeeN): [http://www.cs.princeton.edu/~vivek Vivek Pai] and [http://www.cs.princeton.edu/~abadam Anirudh Badam] at [http://www.cs.princeton.edu Princeton] have done some work on a lightweight caching proxy (HashCache) that may become suitable for OLPC at some point in the future.

::<i>A "caching service" can be implemented with a different approach: not one "big" server doing the caching. ALL the XOs should do some caching, and every XO should "talk" with its neighbors (other XOs) to see if they have the information in their memories (USB drives, flash, or any other kind of storage they have installed at that moment). This collaborative approach between the XOs can be so useful that the WHOLE local network may become the BIG REPOSITORY of the information. It is a matter of design, and of returning to the principles of the Internet: we are all peers, we are not anyone's end users, we are peers. We are "cells" of the big brain, but at the same time WE ARE the big brain --[[User:Peru|Javier Rodriguez. Lima, Peru]]</i>

See also [[Server Caching]]

==Name Resolution and Service Discovery==

The [[School server]] will use and support the use of [http://en.wikipedia.org/wiki/Zeroconf Zeroconf] techniques for device name declaration and service discovery.

It should also support traditional DNS by exporting the mDNS name registry.

:mDNS has some scaling issues which do not (yet) seem to be resolved. In the IPv6 world, we'll try to use Router Advertisement and well-known IPv6 anycast addresses to provide services without the need for explicit discovery protocols. --[[User:CScott|cscott]]

:Also, there may be some issues with trying to support a domain in both traditional unicast DNS and mDNS. While some of the following criticisms by the Avahi team of [http://avahi.org/wiki/AvahiAndUnicastDotLocal sharing a domain between DNS and mDNS] could potentially be mitigated by a programmatic interface and the use of a distinct domain name, others (notably the critique about leaking link-local information) should give pause to anybody considering this. --[[User:Dupuy|@alex]] 23:39, 9 February 2008 (EST)

::''Please remember, that we do not recommend using nss-mdns in this way. Why? Firstly, because the conflict resolution protocol of mDNS becomes ineffective. Secondly, because due to the "merging" of these zones, DNS RRs might point to wrong other RRs. Thirdly, this can become a security issue, because information about the mDNS domain .local which is intended to be link-local might leak into the Internet. Fourthly, when you mistype host names from .local the long mDNS timeout will always occur. Fifthly it creates more traffic than necessary. And finally it is really ugly.''

==Bandwidth Fairness==

The [[School server]] should implement a bandwidth fairness algorithm which prevents a single user from dominating the use of the internet connection. The problem is that the short-term fairness algorithms used by TCP give P2P software the same consideration as users trying to access a document over the web. By biasing the queueing algorithm based on usage over the past 4 to 24 hours, we can protect the casual browser from the heavy downloader.
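One way to bias allocation by recent usage, assuming per-user byte counters over a sliding window and a share inversely proportional to recent usage. The 1 MB scaling constant, the weighting formula and all names are hypothetical choices, not a specified XS algorithm; in a deployment such weights would more plausibly feed the kernel's traffic control.

```python
from __future__ import annotations

import time
from collections import defaultdict, deque

WINDOW_SECONDS = 24 * 3600  # upper end of the 4 to 24 hour range


class UsageTracker:
    def __init__(self, window: float = WINDOW_SECONDS) -> None:
        self.window = window
        self._events: dict[str, deque[tuple[float, int]]] = defaultdict(deque)

    def record(self, user: str, nbytes: int, now: float | None = None) -> None:
        self._events[user].append((time.time() if now is None else now, nbytes))

    def usage(self, user: str, now: float | None = None) -> int:
        now = time.time() if now is None else now
        events = self._events[user]
        while events and events[0][0] < now - self.window:
            events.popleft()  # forget traffic older than the window
        return sum(n for _, n in events)

    def shares(self, users: list[str], link_bps: float,
               now: float | None = None) -> dict[str, float]:
        """Split the link so that light users get more than heavy downloaders."""
        weights = {u: 1.0 / (1.0 + self.usage(u, now) / 1e6) for u in users}
        total = sum(weights.values())
        return {u: link_bps * w / total for u, w in weights.items()}
```

Keying the counters by laptop identity rather than by next-hop address is one possible way to avoid penalizing a whole classroom relayed through a single mesh node, the concern raised in the next paragraph.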

The catch is that, because of our mesh network, we may really be penalizing a remote classroom relayed through a single node.

We could bias the bandwidth allocation based on remote port (e.g. favoring web access to port 80), but this solution seems less than optimal.

:: <i>Proposal: Every XO (every Fedora/Sugar installation) should have a very small program (named TinyProxy or SelfProxy) with only one purpose: keeping track of how many megabytes of information the user has requested from the Internet. Every teacher will automatically receive, on his or her XO, the information coming from the 20, 30 or 100 TinyProxy/SelfProxy programs installed on the students' computers. Depending on the kind of task, the use made of the Internet, and the available bandwidth (or whatever other method is used to reach the Internet), the teacher can assign more "rights" (like money: every kid gets "start money"; "time is money", remember the saying?). A kid who has spent "his money" (time periods or downloaded kilobytes) will have to borrow "time" from a friend, or think more carefully next time he or she receives an allotment of "time". This small and innocuous (?... the teacher will be "Big Brother", eh?) program can be written in any language that supports TCP/IP. Since the boys (and girls) will have root access, they will be able to replace and modify their "tiny self-proxy" programs, but the teacher will FEED them new ones. The database (a small text record) holding the usage history of each kid is located on every XO and is encrypted, so any modification will alert the teacher that something is happening (a system error or a "wise" kid!). --[[User:Peru|Javier Rodriguez. Lima, Peru]]</i>
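The accounting part of this proposal can be sketched as a small per-laptop ledger. Two assumptions are flagged here: the proposal says the record is encrypted, but detecting modification only needs a message authentication code, so this sketch swaps in an HMAC keyed with a hypothetical teacher-held secret; and the "money" is modelled simply as a byte allowance. All names are hypothetical.

```python
from __future__ import annotations

import hashlib
import hmac
import json
from dataclasses import asdict, dataclass


@dataclass
class Ledger:
    user: str
    allowance: int  # bytes the teacher has granted ("start money")
    used: int = 0   # bytes requested from the Internet so far

    def charge(self, nbytes: int) -> bool:
        """Record a download; refuse it if the allowance would be exceeded."""
        if self.used + nbytes > self.allowance:
            return False
        self.used += nbytes
        return True

    def lend(self, friend: Ledger, nbytes: int) -> None:
        """Transfer unused allowance to a friend ("borrowing time")."""
        if nbytes > self.allowance - self.used:
            raise ValueError("not enough unused allowance")
        self.allowance -= nbytes
        friend.allowance += nbytes


def seal(ledger: Ledger, key: bytes) -> dict:
    body = json.dumps(asdict(ledger), sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify(record: dict, key: bytes) -> Ledger:
    expected = hmac.new(key, record["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record["tag"]):
        raise ValueError("ledger was modified: alert the teacher")
    return Ledger(**json.loads(record["body"]))
```

Since students have root access, the HMAC key must not be stored on the student's own laptop; otherwise the ledger could simply be re-sealed after editing.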

==Dynamic IP Address Assignment==

The school server will take responsibility for assigning IP network addresses to devices on the subnets it is routing.

: <i>Strong opinion here: It is entirely possible for a network of XOs to work in isolation (normally they will, because of huge distances and the huge cost of Internet access; in Peru that will be the normal scenario). I think that every local network should use a private address range (192.168.*.*), and all of them will "look" for 192.168.1.1 (an address reserved for the "School Server" or any other way to reach the Internet). The "School Server" will have this private-network address and, at the same time, a "public" Internet address (assigned by DHCP or fixed) to connect to the Internet. This last issue depends on the answer to the following question:

:How will the "School Server" access the Internet?

:* Through a local (national) provider?
:* By connecting to the OLPC network over a VSAT (satellite) connection?
:* By "mule" systems (storing TCP/IP requests and travelling to the nearest Internet-connected point)?
:* By 2 or 3 other ways to reach the net.

:But the point is that having the "School Server" assign IP network addresses is not necessary; this whole issue depends on what kind of Internet connection will be provided. Here in Peru, 250,000 XOs (with an average of 50 XOs per Andean community) means that we need to establish 5,000 "Internet access points" (call them what you like: school servers, VSAT, leased lines, phone lines). VSAT is the only case where we need to think about "assigning" IP network addresses; all the other cases are "not sensitive" because the IP address will be assigned automatically by the Internet provider via DHCP. --[[User:Peru|Javier Rodriguez. Lima, Peru]]</i>

Does the school server give out IP addresses to non-OLPC hardware?

If so, should the DHCP be linked to the mDNS name registry? I.e. if a machine requests a particular name in the DHCP request, should this name be recorded in the mDNS registry for the subnet?
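To make the addressing discussion concrete, here is a minimal lease allocator for a private subnet, assuming (as suggested above) 192.168.1.0/24 with the School server at 192.168.1.1. It answers the mDNS question in one possible way, by recording any hostname the client supplies in a name registry. It only models the bookkeeping, not the DHCP protocol, and all names are hypothetical.

```python
from __future__ import annotations

import ipaddress


class LeasePool:
    def __init__(self, subnet: str = "192.168.1.0/24", server: str = "192.168.1.1") -> None:
        self.server = ipaddress.ip_address(server)
        # Every usable host address except the one reserved for the School server.
        self._free = [ip for ip in ipaddress.ip_network(subnet).hosts() if ip != self.server]
        self.leases: dict[str, ipaddress.IPv4Address] = {}  # MAC -> address
        self.names: dict[str, ipaddress.IPv4Address] = {}   # hostname -> address

    def request(self, mac: str, hostname: str | None = None) -> ipaddress.IPv4Address:
        if mac not in self.leases:  # a returning client keeps its old address
            if not self._free:
                raise RuntimeError("address pool exhausted")
            self.leases[mac] = self._free.pop(0)
        addr = self.leases[mac]
        if hostname:
            self.names[hostname] = addr  # register the requested name for the subnet
        return addr
```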

:IPv6 address assignment is magic; we may consider using the SEND protocol to ensure that routers are trusted. We will probably be using some form of temporary address in IPv6 for privacy reasons, which will require DynDNS or some other mechanism to allow friends to find each other. To protect kids' privacy, the solution can't be a permanent DNS name for each laptop; we need to more flexibly create task/address mappings so that friends can find each other for an activity without making themselves world-locatable. --[[User:CScott|cscott]]
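In the spirit of the IPv6 privacy extensions (RFC 4941), a laptop can derive a fresh random interface identifier inside its /64 prefix, so that the address reveals nothing stable about the machine. The sketch below shows only that step, using a documentation prefix; it omits duplicate address detection and the lifetime rules a real IPv6 stack applies, and the function name is hypothetical.

```python
from __future__ import annotations

import ipaddress
import secrets


def temporary_address(prefix: str) -> ipaddress.IPv6Address:
    """Return a random address inside a /64, in the spirit of RFC 4941."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("expected a /64 prefix")
    iid = bytearray(secrets.token_bytes(8))
    iid[0] &= 0xFD  # clear the universal/local bit: a locally generated identifier
    return net[int.from_bytes(iid, "big")]
```

Generating a new address per activity, rather than per boot, would match the task/address mappings suggested above.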

|||

:Things to think about: Mobile IPv6, IPv6 privacy extensions, SEND (secure neighbor discovery protocol). --[[User:CScott|cscott]] |

|||

Latest revision as of 14:44, 25 April 2013

These are services that the School server will provide. Additional services under consideration for deployment are listed separately. Service meta issues such as installation and management are discussed on School server specifics. A detailed description of the current implementation of these services is also available, along with Test Instructions.

Please help by adding links to existing pages discussing these topics, if you are aware of them

Library

The school library provides media content for the students and teachers using a School server. This content may either be accessed directly from the school library or downloaded onto the laptops. The content in the school library comes from a variety of sources: OLPC, OEPC, schoolbooks and interesting articles from all countries (but most prominently from the students' own country), the regional school organization, other schools, and the teachers and students in the school. It includes both software updates and repair manuals for the laptop, which should ideally be copied from http://wiki.laptop.org/.

A number of library attributes (self-management, scalability, performance, identity) are discussed in their own sections below. But it is clear that the library is not a single service, but rather a set of services (wiki or wiki+, HTTP cache, BitTorrent client, codecs, etc.) which combine to provide the Library functionality.

The library at its core is a "local copy" of a library assembled by the school's country and region, with help from OLPC. This set of documents is assembled on a country/region server, and is named a Regional Library. Each School server has a local library consisting of documents submitted by students and teachers using that server. This is named a Classroom Library. The collection of Classroom Libraries provided by the School servers at a particular school (there will typically be 2 to 15) is named a School Library.

Discovery

Basic bandwidth assumptions: there will be sporadic connections between XOs via the mesh, fast connections between XOs and school servers [SSs], possibly sporadic connections between SSs and the internet at large via regional servers [RSs], and generally fast connections (at least fast downloads) between RSs and the internet.

Notion: XOs can maintain an index of materials seen on local XOs, school and regional servers; and can send requests for material over the mesh or network. School servers can maintain a larger similar index, collating requests for materials and evaluating whether they are likely to be fulfillable over the mesh. Those that aren't can be queued for request from regional servers or the internet.

- Indices would include a variety of useful metadata, including size, location, latency/availability (e.g. whether the item is available via BitTorrent or FTP, and the estimated connection speed), and rough content-based IDs.

- Servers and perhaps XOs would maintain basic statistics on what materials and types were most requested, with some special attention to requests for large files (which are harder to fulfill without saturating the network), and when nearby queues and indexes have been updated.

A reasonably accurate method is needed for keeping these indices mutually up to date, sending feedback back to requestors ("this material is 9GB in size; the request is unlikely to be fulfilled. please contact your network maintainer for more information."), and sending feedback to content hosts ("this file is very frequently requested. please seed a torrent network with it. <links>").
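The request-routing idea above can be sketched as a small index on the school server that decides, per request, whether to serve locally, fetch over the mesh, queue for the regional server, or refuse with feedback. This is a minimal in-memory sketch. The size thresholds, location labels, and class names are hypothetical illustrations, not part of any existing OLPC software.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; real values would depend on measured link capacity.
MESH_LIMIT_BYTES = 50 * 1024**2        # largest item we try to fetch over the mesh
UPSTREAM_LIMIT_BYTES = 2 * 1024**3     # largest item we queue for the regional server

@dataclass
class IndexEntry:
    content_id: str                              # rough content-based ID
    size: int                                    # bytes
    locations: set = field(default_factory=set)  # e.g. {"mesh", "school", "regional"}

@dataclass
class SchoolIndex:
    entries: dict = field(default_factory=dict)
    request_counts: dict = field(default_factory=dict)
    upstream_queue: list = field(default_factory=list)

    def add(self, entry):
        self.entries[entry.content_id] = entry

    def request(self, content_id):
        """Decide how to fulfil a request and return (action, feedback)."""
        self.request_counts[content_id] = self.request_counts.get(content_id, 0) + 1
        entry = self.entries.get(content_id)
        if entry is None:
            return "unknown", "This material is not in any known index."
        if "school" in entry.locations:
            return "serve_local", None
        if "mesh" in entry.locations and entry.size <= MESH_LIMIT_BYTES:
            return "fetch_mesh", None
        if entry.size <= UPSTREAM_LIMIT_BYTES:
            self.upstream_queue.append(content_id)   # fetch later from the regional server
            return "queued_upstream", None
        gb = entry.size / 1024**3
        return "refused", (f"This material is {gb:.0f}GB in size; the request is "
                           "unlikely to be fulfilled. Please contact your network "
                           "maintainer for more information.")

    def popular(self, n=1):
        """Most-requested items: candidates for a "please seed a torrent" notice."""
        return sorted(self.request_counts, key=self.request_counts.get, reverse=True)[:n]
```

The request counts double as the "very frequently requested" statistics that could be fed back to content hosts.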

On the "internet at large" side of indexing are national and global libraries, metadata repositories such as OCLC, global content repositories such as Internet Archive mirrors, and a constellation of web pages organized by various search-engine indexes [cf. open search, Google]. These groups are connected by fast links, so various categorization, discussion, and transformation of material (for instance, into compressed or low-bandwidth formats) can be done there in preparation for sending it back to the less swiftly connected parts of the network. (This mirrors a common practice in media editing: one often scans high-bitrate multimedia over a local network at much lower bitrates, edits the low-bitrate material, and sends the collected edits back to be applied to the original media on high-power machines over a high-bandwidth network.)

Scalability

A pre-determined subset of the Regional Library is mirrored in the School Library; the remainder of the documents is available over the Internet connection.

Caching of recently accessed documents will be done to provide the best user experience given limited connectivity; it also has the effect of minimizing the load on the Regional Library. This will be performed by the transparent HTTP proxy.

While a peer-to-peer protocol may be used to help scale the Regional Library, it must be sensitive to the fact that a large number of schools will have very limited upstream bandwidth and would not participate as peers. And while this protocol may serve well for mirroring content onto the school servers, using it for student access to the library would require a more complex client than a browser (see P2P Caching).
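To illustrate how caching recently accessed documents trades storage for upstream load, here is a minimal sketch of a byte-bounded least-recently-used (LRU) cache. LRU is one common replacement policy, assumed here for illustration; this is not a description of how Squid or any other proxy is implemented, and `fetch`/`upstream` are hypothetical names.

```python
from collections import OrderedDict

class LRUDocumentCache:
    """Byte-bounded least-recently-used cache of documents keyed by URL."""

    def __init__(self, capacity_bytes):
        self.capacity = capacity_bytes
        self.used = 0
        self._items = OrderedDict()  # url -> bytes, least recently used first

    def get(self, url):
        body = self._items.get(url)
        if body is not None:
            self._items.move_to_end(url)  # mark as most recently used
        return body

    def put(self, url, body):
        if len(body) > self.capacity:
            return  # too large to cache at all
        if url in self._items:
            self.used -= len(self._items.pop(url))
        self._items[url] = body
        self.used += len(body)
        while self.used > self.capacity:
            _, evicted = self._items.popitem(last=False)  # drop least recently used
            self.used -= len(evicted)

def fetch(url, cache, upstream):
    """Serve from the cache when possible; otherwise fetch upstream and cache it."""
    body = cache.get(url)
    if body is None:
        body = upstream(url)
        cache.put(url, body)
    return body
```

Every cache hit is one request that never crosses the school's Internet link, which is where the reduced load on the Regional Library comes from.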

At the school level, there is some possibility of taking advantage of local connectivity to distribute the document cache among all servers in the school. Unfortunately, the exact piece of software we need hasn't been developed yet (see P2P Caching).

Good places to get ideas from are Andrew Tanenbaum's distributed operating system Amoeba, with its transparent and performant Bullet File Server, which could be expanded into a growing, global distributed RAID file system that never runs out of storage, using something like the ATA over Ethernet protocol, GoogleFS, or something better.

Self-management

What is the mechanism for user submissions of media to the library? Teachers (and students) should certainly have a place to put resources they create, where everyone can retrieve them.

What about local wikis? Should they be supported on the School server? They are probably best replaced with a more function-oriented information organization tool such as Rhaptos or Moodle, and an online extension of the Journal which supplants blogging.

Repository

Content-centered, but allowing collaboration in building new content. See http://cnx.org for an example. The software behind it is available as open source under the name Rhaptos; it is Python-based.

Collaborative/Publishing/Learning Management System

Moodle could be an option? http://moodle.org

- Exchanging published work with other communities could be done using a USB key, taking a class portfolio (using Moodle pages) to another village. Distance collaboration can also be done asynchronously: each school has its own page or set of pages in Moodle, and it works like standard mail, travelling by land to another school, with kids contributing, commenting, and peer-assessing, and then back again. --Anonymous contributor

A Mini-Moodle Plan

- These draft notes are from Martin Langhoff, Moodle developer (14/03/2007): we are keen on helping prepare, package, and customise Moodle for deployment in this environment, and have experience with Debian/RPM/portage packaging and auth/authz/SSO. I lurk on laptop-dev and sugar-dev and am keen to discuss this further. Right now I'm reading up on Bitfrost.

There has been some private correspondence between Moodle devs (MartinD, MartinL) discussing an overview of the main work areas for Moodle on XS:

- Packaging (RPM?)

- Provide an out-of-the-box config that Just Works

- Integration with auth / sso (Bitfrost)

- Integration with group / course management (Is this too structured ;-) ?)

- Replace mod/chat and messaging infrastructure with mod/chat-olpc that hooks into Sugar chat/IM

- (maybe) Replace mailouts with OLPC infrastructure for email

- Work on an XO theme and UI revamp

(MartinL is happy to help on all except the theme/UI, due to sheer lack of talent)

That covers the basics of getting integration in place. The fun work will be in creating activity modules that make sense in an XO environment.

- Maybe this should be moved to a subpage?

- Just thought I'd let you guys know that there is a project run by the OU (UK) to develop an offline version of Moodle. It might be something you could use and contribute to, as it sounds like our purpose overlaps with yours. You can find out more at http://hawk.aos.ecu.edu/moodle/ (just sign up to view the course), and let's see how the two projects can help each other. In particular, from the list above we are focusing on options 2, 3 and 4, so our work may provide a reference for you. Colin Chambers 13:50 03/12/2007

Bandwidth Amplification of Local Content

This refers to the problem of supplying content posted to a School Library to a large number of other schools. This is difficult because of the limited uplink bandwidth available at a typical school. Unfortunately, the architecture most useful for nonprofit content distribution, peer-to-peer (P2P), is not well suited for use by school servers with highly asymmetrical (DSL, satellite) network connections.

One solution is a well-connected server with a large amount of storage, provided at the regional or country level, which mirrors the unique content from each school. This has the added benefit of backing up that content. P2P access protocols may be used to reduce the load on these (seed) servers by making use of any regional schools with good uplink connectivity.

- Here are some thoughts on solutions and challenges in this area. First, make sure that the upstream bandwidth is fully utilized all the time. That will require some prioritization so that active user traffic (e.g. a web browser requesting a page) goes first. After all "real time" user traffic is sent upstream, the XS can use the remaining bandwidth to push uploads of other material. The basic idea is that schools upload large files overnight, while the interactive traffic generated directly by XOs goes out as soon as possible. This may require space on the XS where files going upstream can be stored while awaiting upload. It also needs support for file transfers that can start, stop, and then resume where they left off.

- The second basic point is to compress before uploading. There's a trade-off between CPU utilization and bandwidth utilization (i.e. compressing every packet can bog down the XS CPU), so it probably makes sense to compress only large, non-real-time uploads.

- The core challenge may be how to implement this without breaking common applications. For example, you want users to be able to upload content to any HTTP-based app (wiki, blog, etc.), and those may not be hosted on the school network, so you have no control over them. Therefore, they may not support a start, stop, and resume upload paradigm. One idea is to intercept all HTTP POST messages on the XS. Those over a certain size get passed to a regional server. Once the regional server receives the full POST message with its data, it re-initiates the HTTP POST and rapidly completes the transaction. That will require some kind of caching or spoofing code on the regional server.

- That complexity could be avoided by constraining large uploads to servers hosted by the school system. However, it may still require rewriting the hosting applications. For example, uploads to a school-hosted wiki can be intercepted by the XS, which then does a remote copy (rcp), FTP upload, or similar to the right directory on the wiki, and the content becomes available to everyone. That requires a wiki which allows content to be posted just by placing it in the right directory. This may not be trivial, but it could be a much easier problem to solve than trying to spoof HTTP POSTs.

- I hope that's not too general a comment for this page and I hope it makes sense. In short, if you only have a small amount of upstream bandwidth, use what you have all the time and make every bit count.

--Gregorio 16:04, 7 December 2007 (EST)
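The upstream scheduling suggestions above (interactive traffic first, bulk uploads spooled on the XS and sent with leftover bandwidth, resumable transfers, compression only for large non-real-time items) can be sketched as a priority queue with per-item offsets. This is a minimal sketch under stated assumptions: the two priority classes, the 1 MiB compression threshold, and the `link` callback are hypothetical, and zlib is simply one readily available compressor.

```python
import heapq
import itertools
import zlib

INTERACTIVE, BULK = 0, 1                 # lower number = higher priority
COMPRESS_THRESHOLD = 1024 * 1024         # hypothetical: only compress bulk items >= 1 MiB

class UploadQueue:
    """Spool of pending upstream transfers on the school server (a sketch)."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()    # FIFO tie-breaker within a priority class

    def submit(self, name, data, priority):
        if priority == BULK and len(data) >= COMPRESS_THRESHOLD:
            data = zlib.compress(data)   # spend CPU only on large, non-real-time uploads
        # Each entry tracks an offset so a transfer can stop and later resume.
        item = {"name": name, "data": data, "offset": 0}
        heapq.heappush(self._heap, (priority, next(self._seq), item))

    def send(self, budget, link):
        """Use up to `budget` bytes of uplink this tick; interactive items go first."""
        while budget > 0 and self._heap:
            _, _, item = self._heap[0]
            start = item["offset"]
            chunk = item["data"][start:start + budget]
            link(item["name"], start, chunk)     # hypothetical transport callback
            item["offset"] += len(chunk)
            budget -= len(chunk)
            if item["offset"] >= len(item["data"]):
                heapq.heappop(self._heap)        # transfer finished
        return budget                            # unused uplink capacity
```

Because a partially sent bulk item keeps its offset, newly arriving interactive traffic pre-empts it on the next tick, and the bulk upload resumes from where it stopped.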

Security and Identity

The Library uses the identity convention introduced by Bitfrost. When a laptop is activated, it is associated in some way (TBD) with a school server. This is the School server hosting the Classroom Library that a student is allowed to publish onto. Students not associated with a Classroom Library only have read permissions on the Library.
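The permission rule just described (everyone reads; only laptops associated with a server may publish to that server's Classroom Library) can be sketched as follows. The association mechanism is TBD in the source, so representing it as a stored server identifier set at activation is an assumption, and the names and serials used here are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Laptop:
    serial: str
    school_server: Optional[str]   # assumed: recorded at activation; None if not associated

def library_permissions(laptop, classroom_library_server):
    """Return the set of permissions a laptop has on one Classroom Library."""
    perms = {"read"}               # every student can read the Library
    if laptop.school_server == classroom_library_server:
        perms.add("publish")       # only the associated server's library accepts publishing
    return perms
```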

Backup

According to this description of the Journal, it will provide automatic backup to the School server, with a variety of restore options.

What are the plans for providing additional storage to users of the XO laptops? How does the Journal handle filling up the available storage on the XO, or the storage allocated to it on the School server?

Network Router

The School server is first and foremost a node in the wireless mesh which provides connectivity to the larger internet.

A starting assumption is that it is largely a transparent router. It does not perform any network address translation, and very little packet filtering. It will perform bandwidth shaping to ensure fair access to the internet.

- Note: March 2, 2008.

- In Peru it will be impossible for the School Server to behave as a transparent router, because:

- a) The most searched-for word in Peru is "sex" (I developed commercial Internet networks and ISPs in the mid-1990s, and my personal findings match what other studies have shown). Peruvian law places responsibility for the viewing of pornography on the owner or administrator of the Internet cafe. For legal purposes, each group of XOs, together with the School Server and the teacher in charge of the whole system, will be considered an "Internet cafe". Some kind of pornography filtering must be provided. Penalties for those responsible include jail; every "Internet cafe" in Peru must provide a "safe" zone for children (special computers with strong filtering). That is the law.

- b) There are enough IPv4 addresses, but in Peru obtaining them is a cumbersome task. First-hand experience: it is not impossible, but the big telecoms absorbed the available blocks a long time ago. The American authorities in charge of assigning IP blocks tell us: "ask your telecoms, they have a ton of IP addresses that are not in use". Normally the incumbent telecom here in Peru ("THE telecom") has not been happy with Internet access that doesn't use its infrastructure (they will not provide the VSATs, so they will have no interest in helping with IPs for a "foreign" network; they will tell us to get IP addresses from the VSAT provider).

- c) Public IPs are exposed to Internet attacks (such as DoS, Denial of Service).

- d) It will be mandatory to block some protocols (to avoid unnecessary use of the bandwidth, which would turn the project into a short-lived idea), such as special video protocols (no bandwidth budget can support 100 XO computers each requesting different videos). In extreme cases, a cache system or HTTP filtering can be established to block big images (entirely possible), movie downloads (possible), sites that use Flash (nothing against Flash, just an example), or any other "heavy" content. In the early days of the Internet we could download a big file, but it arrived "chunked" and we had to rebuild it on our computers. Since there will not be enough bandwidth in Peru, we must analyze these and similar situations and present solutions.

- In my opinion, NAT (Network Address Translation) is needed to avoid some of these problems. At the same time, the School Server can provide a caching service to make efficient use of the always scarce Internet bandwidth that the schools will get.

- All this runs counter to what we might want for the children: freedom of choice and direct access to the Internet world (each XO with its own public IP, and the child able to run an Internet server if he or she wants to, and knows how; they will know for sure!). So the final question is: how much liberty are we going to give to the end users (kids and young people) in charge of their XOs?

- Javier Rodriguez. Lima, Peru. info@olpc-peru.info

- DSL and satellite, and for that matter UMTS and GPRS, are scarce commodities for which you still have to pay royalties, as they are controlled by monopolists. The bandwidth could be greatly expanded if we managed to produce cheap, waterproof "datawashlines": thin copper telephone cords carrying low-voltage power, with wireless and USB Ethernet repeaters every 100 meters or however often it takes. These lines could be cheaply and quickly rolled out along train tracks, through subway tunnels, lakes, rivers and oceans, alongside roads and paths, and through forests to the most remote villages, cabins, or lookout points. The routers would be self-learning, automatically connecting every device's Ethernet address to all others over a usually redundant set of routes.

- For Peru: all networking to reach the main Internet must be done using wireless or some other alternative method; it is not possible to use copper or any other "land" line such as fiber. If the XO computers are installed in the poorest part of the country (above 4,000 meters, or about 13,000 feet, of altitude), you can drive for 3 hours without finding another community or town, and you can drive for half a day to reach the nearest bigger town that has regular electricity and some kind of Internet connection (normally an Internet cafe or "cabina internet"). By the way, the Internet cafe we find as the nearest place with Internet will have computers running Windows. Finding one with Linux will be a huge task. No, they will not install any kind of Linux on their computers (it has been tried before at the national level, and all Peruvian Linux users know that it is not easy to teach, show, and spread the word about the nice features of Linux systems). Finally, we at OLPC are an educational project, not a computer project, so we are not attached to any hardware or software (or are we?). Distances are huge: no copper lines can be used, wireless is a better option, radio (old short-wave radio) should be studied, and "mule" systems must be evaluated (we are studying the issue here). --Javier Rodriguez. Lima, Peru

- Providing IPv6 connectivity from the mesh to the internet will require the server to provide a tunnel over IPv4 to the closest IPv6 network, since most sites will not be providing direct IPv6 connectivity. --cscott

- IPv4 is doomed to be completely replaced long before the signed 32-bit Unix clock rolls over in 2038, so we should not worry about it too much, but rather create incentives to upgrade to IPv6 and support IPv4 only sparingly.

HTTP Caching

The only packet filtering proposed is a transparent proxy on port 80, which will allow a caching of commonly accessed HTTP (web) content to occur locally. This will reduce the load on the internet connection, as well as the response time seen by a user. This may be relied upon to implement the School library.

- Again, due to IPv6/IPv4 interoperability issues, the proxy can't be transparent, unless we're willing to do NAT for the laptops to give them a routable IPv4 address. --cscott

The problem here is the availability of suitable HTTP caching software. The most popular open source option, Squid, has supported both IPv4 and IPv6 since version 3.1, and can be built without many of its additional features where they are not needed.

- There are other HTTP caches that are less over-engineered and easier to configure than Squid, most prominently Roxen, Apache and wwwoffle; a new, slim one would be easy to write in Perl or possibly Python.

A more intelligent caching/redirection scheme may be provided by CoDeeN. Unfortunately, it is built on top of commercial proxy software.

A third option (potentially related to CoDeeN): Vivek Pai and Anirudh Badam at Princeton have done some work on a lightweight caching proxy (HashCache) that may become suitable for OLPC at some point in the future.

- A caching service can be built with a different approach: rather than a single "big" server doing the caching, ALL the XOs do some caching, and every XO "talks" with its neighbors (other XOs) to see whether they have the information in their storage (USB keys, flash, or any other kind of memory installed at that moment). This collaborative approach between XOs can be so useful that the whole local network could become the BIG REPOSITORY of information. It is a matter of design and of returning to the principles of the Internet: we are all peers, we are nobody's end users, we are peers. We are "cells" of the big brain, but at the same time WE ARE the big brain. --Javier Rodriguez. Lima, Peru

See also Server Caching
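The XO-to-XO collaborative caching suggestion in the last comment can be sketched as a lookup that checks local storage, then asks neighbouring XOs (up to a hop limit), and only then goes upstream. This is a minimal in-memory sketch. The neighbour list, the two-hop limit, and the rule that only the requesting XO fetches upstream are assumptions; a real system would also need a mesh transport, eviction, and content validation.

```python
class CooperativeCache:
    """One XO's cache that consults neighbouring XOs before going upstream."""

    def __init__(self, name):
        self.name = name
        self.store = {}        # url -> content held on this XO (flash, USB, ...)
        self.neighbours = []   # other CooperativeCache instances reachable on the mesh

    def lookup(self, url, upstream, max_hops=2, _seen=None):
        """Return (content, where_found), or (None, None) if nobody had it."""
        seen = _seen if _seen is not None else set()
        seen.add(self.name)
        if url in self.store:
            return self.store[url], self.name
        if max_hops > 0:
            for peer in self.neighbours:
                if peer.name in seen:
                    continue
                content, where = peer.lookup(url, None, max_hops - 1, seen)
                if content is not None:
                    self.store[url] = content     # keep a copy locally as well
                    return content, where
        if upstream is not None:                  # only the requesting XO goes upstream
            content = upstream(url)
            self.store[url] = content
            return content, "upstream"
        return None, None
```

Copies are left on each XO along the path, so popular content spreads through the local network and later requests are more likely to be answered without using the Internet link.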

Name Resolution and Service Discovery

The School server will use and support the use of Zeroconf techniques for device name declaration and service discovery.

It should also support traditional DNS by exporting the mDNS name registry.

- mDNS has some scaling issues which do not (yet) seem to be resolved. In the IPv6 world, we'll try to use Router Advertisement and well-known IPv6 anycast addresses to provide services without the need for explicit discovery protocols. --cscott

- Also, there may be some issues with trying to support a domain in both traditional unicast DNS and mDNS. While some of the following criticisms by the Avahi team of sharing a domain between DNS and mDNS could potentially be mitigated by a programmatic interface and the use of a distinct domain name, others (notably the critique about leaking link-local information) should give pause to anybody considering this. --@alex 23:39, 9 February 2008 (EST)

- Please remember, that we do not recommend using nss-mdns in this way. Why? Firstly, because the conflict resolution protocol of mDNS becomes ineffective. Secondly, because due to the "merging" of these zones, DNS RRs might point to wrong other RRs. Thirdly, this can become a security issue, because information about the mDNS domain .local which is intended to be link-local might leak into the Internet. Fourthly, when you mistype host names from .local the long mDNS timeout will always occur. Fifthly it creates more traffic than necessary. And finally it is really ugly.
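The two mitigations mentioned above (a programmatic interface and a distinct domain name) could look roughly like the following sketch, which turns an mDNS-style name registry into zone-file records under a separate domain and skips link-local addresses so they are not published beyond the link. The domain `xs.school.example.` and the registry format are hypothetical. This addresses only part of the Avahi critique; it does nothing about conflict resolution or lookup timeouts.

```python
import ipaddress

def export_registry(registry, domain="xs.school.example."):
    """Turn {"laptop-1.local": "10.0.0.5", ...} into zone-file lines under `domain`.

    Names are re-rooted under a distinct domain instead of .local, and
    link-local addresses are skipped so they are not published beyond the link.
    """
    lines = []
    for name, addr in sorted(registry.items()):
        ip = ipaddress.ip_address(addr)
        if ip.is_link_local:
            continue
        label = name[:-len(".local")] if name.endswith(".local") else name
        rtype = "A" if ip.version == 4 else "AAAA"
        lines.append(f"{label}.{domain} IN {rtype} {ip}")
    return lines
```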

Bandwidth Fairness

The School server should implement a bandwidth fairness algorithm which prevents a single user from dominating the use of the internet connection. The problem is that the short-term fairness algorithms used by TCP give P2P software the same consideration as users trying to access a document over the web. By biasing the queueing algorithm based on usage over the past 4 to 24 hours, we can protect the occasional browser from the heavy downloader.

One complication is that, because of our mesh network, we may really be penalizing an entire remote classroom whose traffic is relayed through a single node.

We could bias the bandwidth allocation based on remote port (e.g. favoring web access to port 80), but this solution seems less than optimal.
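The idea of biasing allocation by usage over the past several hours can be sketched with an exponentially decaying per-user byte counter, where each active user's share of the link shrinks as recent usage grows. The 4-hour half-life, the 1 MB scaling constant, and the `1 / (1 + usage)` weighting are hypothetical choices. The sketch keys usage by user, so it only avoids the mesh-relay problem noted above if traffic can actually be attributed to individual laptops rather than to the relaying node.

```python
import math

HALF_LIFE_S = 4 * 3600   # hypothetical: usage counted with a 4-hour half-life

class UsageTracker:
    """Exponentially decaying per-user byte counts, used to bias bandwidth shares."""

    def __init__(self, half_life_s=HALF_LIFE_S):
        self.decay_rate = math.log(2) / half_life_s
        self.usage = {}      # user -> (decayed bytes, timestamp of last update)

    def _decayed(self, user, now):
        used, t = self.usage.get(user, (0.0, now))
        return used * math.exp(-self.decay_rate * (now - t))

    def record(self, user, nbytes, now):
        self.usage[user] = (self._decayed(user, now) + nbytes, now)

    def shares(self, active_users, capacity_bps, now):
        """Split link capacity so heavy recent users get proportionally less."""
        weights = {u: 1.0 / (1.0 + self._decayed(u, now) / 1e6) for u in active_users}
        total = sum(weights.values())
        return {u: capacity_bps * w / total for u, w in weights.items()}
```

A user who downloaded 500 MB in the last few hours gets a small share, while a newcomer with no recent usage gets nearly the whole link; as hours pass without traffic, the heavy user's weight recovers.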

- Proposal: every XO (every Fedora/Sugar installation) should have a very small program (named TinyProxy or SelfProxy) with only one purpose: keeping account of how many megabytes of information the user has requested from the Internet. Every teacher will automatically receive, on his or her XO, the information coming from the 20, 30, or 100 tiny self-proxies installed on the students' computers. According to the kind of task, the use of the Internet that has been made, and the available bandwidth (or whatever other method is used to reach the Internet), the teacher can assign more "rights", like money: every kid gets "start money" ("time is money", remember the saying?). A kid who has spent "his money" (time periods or downloaded kilobytes) will have to borrow "time" from a friend, or think better next time he or she receives an assigned allowance. This small and innocuous program (innocuous? the teacher will be "Big Brother", eh?) can be written in any language that supports TCP/IP. Since the boys and girls will have root access, they will be able to replace and modify their tiny self-proxy programs, but the teacher will FEED them new ones. The database (a small text record) holding each kid's usage history is located on every XO, and it is encrypted, so any modification will raise an alarm for the teacher, who will know that something is happening (an error in the system or a "wise" kid!). --Javier Rodriguez. Lima, Peru
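The allowance-and-borrowing scheme in this proposal can be sketched as a per-XO ledger that the local proxy charges for each download. The proposal says the record is encrypted; detecting modification is really the job of a message authentication code, so this sketch swaps in HMAC-SHA256 from Python's `hmac` module. The class name, megabyte units, and key handling are hypothetical. Note that if the key is stored on the XO, a child with root access could recompute the tag, so this only detects naive edits unless verification happens with a key the XO does not hold.

```python
import hashlib
import hmac
import json

class QuotaLedger:
    """Per-XO record of allowance and usage, tagged so edits are detectable."""

    def __init__(self, key, allowance_mb):
        self._key = key
        self.record = {"allowance_mb": allowance_mb, "used_mb": 0.0, "borrowed_mb": 0.0}
        self.tag = self._sign()

    def _sign(self):
        payload = json.dumps(self.record, sort_keys=True).encode()
        return hmac.new(self._key, payload, hashlib.sha256).hexdigest()

    def verify(self):
        """True if the record still matches its tag (what the teacher would check)."""
        return hmac.compare_digest(self.tag, self._sign())

    def remaining(self):
        r = self.record
        return r["allowance_mb"] + r["borrowed_mb"] - r["used_mb"]

    def charge(self, mb):
        """Called by the local proxy for each download; False means out of budget."""
        if mb > self.remaining():
            return False
        self.record["used_mb"] += mb
        self.tag = self._sign()
        return True

    def borrow_from(self, friend, mb):
        """Move unused allowance from a friend's ledger to this one."""
        if mb > friend.remaining():
            return False
        friend.record["allowance_mb"] -= mb
        friend.tag = friend._sign()
        self.record["borrowed_mb"] += mb
        self.tag = self._sign()
        return True
```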

Dynamic IP Address Assignment

The school server will take responsibility for assigning IP network addresses to devices on the subnets it is routing.

- Strong opinion here: it is entirely possible for a network of XOs to work in isolation (normally they will, because of the huge distances and the huge cost of Internet access; in Peru that will be the normal scenario). I think that every local network must use private addresses (192.168.*.*), and all of them will "look" for 192.168.1.1 (an address reserved for the School server or any other way to reach the Internet). The School server will have this private network address and, at the same time, a public Internet address (assigned by DHCP or fixed) to connect to the Internet. This last issue depends on the answer to the following question:

- How will the School server have access to the Internet?

- By one local (national) provider?

- Connecting to the OLPC network by VSAT (satellite) connection

- By "mule" systems (storing TCP/IP requests and traveling to the nearest Internet-connected point)

- 2 or 3 other ways to reach the net.

- But the point is that having the School server assign IP network addresses is not necessary; this whole issue depends on what kind of Internet connection will be provided. Here in Peru, 250,000 XOs (at an average of 50 XOs per Andean community) means that we need to establish 5,000 Internet access points (call them what you like: school servers, VSAT, leased lines, phone lines). VSAT is the only case where we need to think about assigning IP network addresses; all the other cases are not sensitive to this, because the IP address will be assigned automatically by the Internet provider via DHCP. --Javier Rodriguez. Lima, Peru

Does the school server give out IP addresses to non-OLPC hardware? If so, should the DHCP be linked to the mDNS name registry? I.e. if a machine requests a particular name in the DHCP request, should this name be recorded in the mDNS registry for the subnet?

- IPv6 address assignment is magic; we may consider using the SEND protocol to ensure that routers are trusted. We will probably be using some form of temporary address in IPv6 for privacy reasons, which will require DynDNS or some other mechanism to allow friends to find each other. To protect kids' privacy, the solution can't be a permanent DNS name for each laptop; we need to more flexibly create task/address mappings so that friends can find each other for an activity without making themselves world-locatable. --cscott

- Things to think about: Mobile IPv6, IPv6 privacy extensions, SEND (secure neighbor discovery protocol). --cscott
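The private-addressing proposal (a 192.168.1.0/24 subnet with 192.168.1.1 reserved for the School server) and the question of recording the host name a client sends in its DHCP request (DHCP option 12) can be illustrated together with a toy allocator. This is not a DHCP implementation: there is no lease expiry or wire protocol, and the class and method names are hypothetical. Refusing a name that is already bound to a different machine is one assumed policy for avoiding conflicts in the name registry.

```python
import ipaddress

class LeasePool:
    """Toy address allocator for a private school subnet (not a DHCP implementation)."""

    def __init__(self, subnet="192.168.1.0/24", server_addr="192.168.1.1"):
        self.network = ipaddress.ip_network(subnet)
        self.server = ipaddress.ip_address(server_addr)
        self.leases = {}      # MAC address -> IPv4Address
        self.names = {}       # requested host name -> IPv4Address

    def allocate(self, mac, hostname=None):
        if mac not in self.leases:
            taken = set(self.leases.values()) | {self.server}
            free = (h for h in self.network.hosts() if h not in taken)
            addr = next(free, None)
            if addr is None:
                raise RuntimeError("address pool exhausted")
            self.leases[mac] = addr
        addr = self.leases[mac]
        # Record the host name the client asked for, unless that name is
        # already bound to a different machine's address.
        if hostname and self.names.get(hostname, addr) == addr:
            self.names[hostname] = addr
        return addr
```

The same MAC address always gets the same address back, and the first laptop to claim a name keeps it.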